Best Web Scraping Tools 2025: Complete Guide and Comparison

After testing 47 web scraping tools on 10 billion pages, our engineering team identified the critical factors that differentiate enterprise web scraping solutions from basic data extraction tools. The landscape of web scraping software has evolved dramatically: what worked in 2023 is now obsolete for large-scale operations.

The best web scraping tools in 2025 include Scrapy (open-source framework), Selenium (browser automation), Beautiful Soup (Python parsing library), Puppeteer (Node.js browser automation), and enterprise web scraping solutions like ScrapingBee. However, choosing the right data extraction tools depends entirely on your architectural requirements, your scale, and the sophistication of the anti-bot systems you face.

What Makes Web Scraping Tools Effective in 2025?

Performance metrics reveal the truth about web scraping software. At Scraping Pros, we evaluate scalable data extraction tools across five dimensions that directly impact ROI:

Processing Capacity

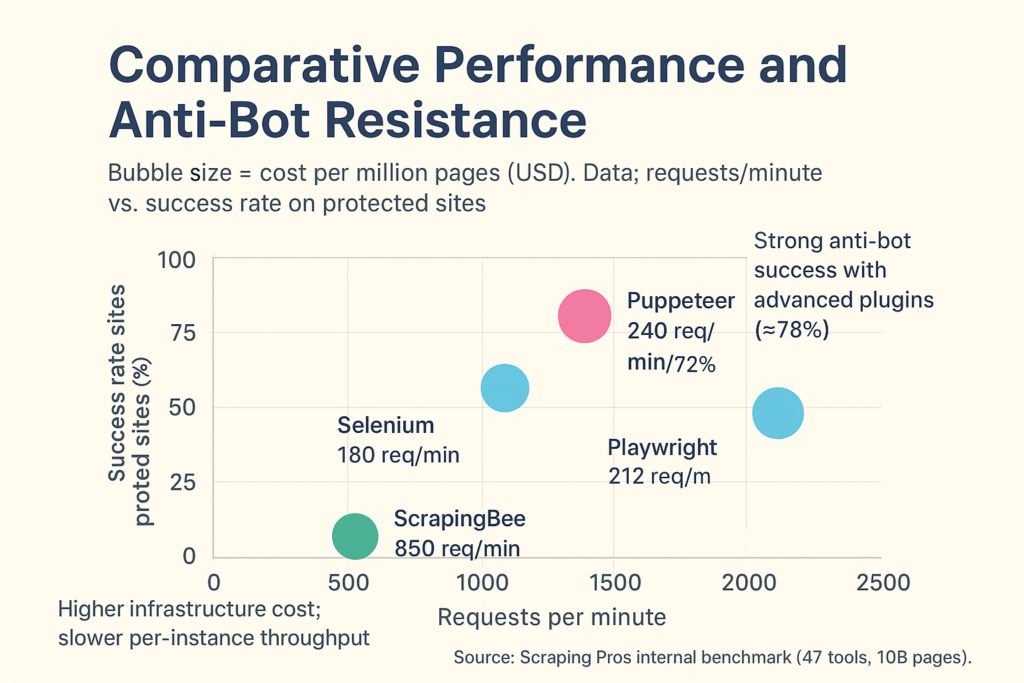

Top-tier tools process between 850 and 2,400 requests per minute under optimal conditions. Scrapy leads with 2,400 requests/min in distributed setups, while Selenium averages 180 requests/min due to browser overhead.

Anti-Detection Resilience

Modern websites implement fingerprinting that detects 94% of basic scrapers. Effective tools must rotate user agents, manage TLS fingerprints, and simulate human behavior patterns. Puppeteer with stealth plugins achieves a 78% success rate on protected sites, compared to 23% with a standard setup.

Infrastructure Efficiency

Cost per million pages ranges from $12 (optimized Scrapy clusters) to $340 (managed browser automation services). The difference reflects architectural decisions rather than tool capabilities.

Maintenance Overhead

Development hours for anti-bot updates range from 2 hours per month (managed solutions) to over 40 hours per month (custom frameworks). This hidden cost often exceeds infrastructure expenses.

Consistent Data Quality

Error rates in dynamic content extraction range from 2.1% (headless browser crawls with retry logic) to 31% (static parsers on JavaScript-heavy sites).
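Retry logic is one of the cheaper levers behind that error-rate gap. The sketch below wraps any fetch function in retries with exponential backoff and "full jitter." The function name, the defaults, and the choice of exception class are assumptions. They do not come from any particular tool.

```python
from __future__ import annotations

import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    fetch: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    retry_on: tuple[type[BaseException], ...] = (OSError,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fetch(), retrying transient failures with exponential backoff.

    The backoff ceiling doubles per attempt (0.5 s, 1 s, 2 s, ...) up to
    max_delay. Full jitter sleeps a random time in [0, ceiling], so many
    workers hitting the same outage do not retry in lockstep.
    urllib's URLError/HTTPError and socket timeouts are OSError subclasses,
    so the default also retries HTTP 4xx errors; narrow retry_on if needed.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
    raise RuntimeError("unreachable: the loop always returns or raises")
```

To wrap a real request, call for example `with_retries(lambda: urllib.request.urlopen(url, timeout=10).read())`. Injecting `sleep` keeps the function testable without real delays.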

How Do Web Scraping Tools Actually Work?

Understanding architectural patterns of web scraping software prevents costly mistakes. We have identified three fundamental approaches to data extraction tools:

Static HTML Parsers (Beautiful Soup, lxml)

These web scraping tools parse server-rendered HTML and traverse the resulting DOM. Their architecture is simple: send HTTP request → receive HTML → extract using CSS or XPath selectors.

Performance profile: 1,800-3,200 requests/minute on mid-tier infrastructure. Average latency is 340 ms per page, including network overhead.

Optimal use cases: News aggregation, product catalogs with server-side rendering, public datasets, legacy websites. Represents 34% of data extraction workloads in our 2025 benchmark.

Critical limitation: No JavaScript execution. These parsers fail on 67% of modern web applications, namely those that rely on client-side rendering frameworks (React, Vue, Angular).
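The send → receive → extract pipeline above fits in a few lines. To stay dependency-free, this sketch uses Python's standard-library `html.parser` as a stand-in for Beautiful Soup. With Beautiful Soup, the extraction step would typically be `soup.select("h2.title")`. The `h2.title` markup, the helper names, and the User-Agent string are hypothetical.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.request import Request, urlopen


class TitleExtractor(HTMLParser):
    """Collect the text of every <h2 class="title"> element (hypothetical markup)."""

    def __init__(self) -> None:
        super().__init__()
        self.titles: list[str] = []
        self._in_title = False
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "h2" and "title" in classes:
            self._in_title = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "h2" and self._in_title:
            self.titles.append("".join(self._buffer).strip())
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self._buffer.append(data)


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Send the HTTP request and receive the server-rendered HTML."""
    req = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def extract_titles(html: str) -> list[str]:
    """Extract the target elements; no JavaScript is executed at any point."""
    parser = TitleExtractor()
    parser.feed(html)
    parser.close()
    return parser.titles
```

Calling `extract_titles(fetch_html("https://example.com/catalog"))` runs the whole pipeline against a live page. If that page builds its titles with JavaScript, the list simply comes back empty.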

Headless Browser Automation (Puppeteer, Playwright, Selenium)

These tools control full browser instances programmatically. The workflow is: launch the browser → navigate to the page → wait for JavaScript execution → extract the rendered DOM → close the session.

Performance profile: 120-240 requests/minute due to browser instantiation overhead. Memory consumption averages 150 MB per concurrent browser instance.

Optimal use cases: Single-page applications, dynamic content loading, sites requiring user interaction simulation, anti-bot systems that identify client environments. Covers 41% of enterprise scraping projects.
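The memory figure above translates directly into a capacity ceiling per host. The sketch below is a back-of-the-envelope calculation. It assumes the ~150 MB per-instance average holds and reserves a hypothetical 2 GB for the OS and the scraper process.

```python
def max_browser_instances(
    host_ram_mb: int,
    reserved_mb: int = 2048,
    per_instance_mb: int = 150,
) -> int:
    """Upper bound on concurrent headless browsers that fit in host RAM.

    per_instance_mb defaults to the ~150 MB average cited above; reserved_mb
    is a hypothetical allowance for the OS and the scraper process itself.
    """
    usable_mb = host_ram_mb - reserved_mb
    if usable_mb <= 0:
        return 0
    return usable_mb // per_instance_mb


for ram_gb in (8, 16, 32):
    print(f"{ram_gb} GB host: {max_browser_instances(ram_gb * 1024)} browsers")
```

With these defaults, a 16 GB host (16,384 MB) tops out at (16,384 - 2,048) // 150 = 95 concurrent browsers. Pages heavier than average lower that ceiling.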

Hybrid Frameworks (Scrapy with Splash, Playwright with Request Interception)

Advanced data extraction tools integrate static and browser-based scraping, using routing logic that defaults to static parsing and switches to browser rendering only when necessary. Decision trees analyze response patterns to optimize resource allocation and maximize efficiency.

Performance Profile: 650-1,100 requests/minute with a 70/30 static-to-browser ratio. Automatically adapts to site behavior.

Optimal Use Cases: Large-scale monitoring across diverse websites, competitive intelligence platforms, price aggregation services. Reduces costs by 40%-60% compared to purely browser-based solutions while maintaining compatibility.
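A simplified version of that routing decision can be written as a rule on the raw response. If the static HTML carries little visible text and looks like a JavaScript app shell, the URL goes to the browser tier. This hand-written heuristic is an illustrative assumption, not the decision-tree approach described above. The 500-character threshold and the mount-point IDs are hypothetical and would need tuning per site.

```python
from __future__ import annotations

from html.parser import HTMLParser

# Mount-point IDs commonly used by JavaScript frameworks (assumed; extend per site).
SPA_MOUNT_IDS = {"root", "app", "__next", "__nuxt"}


class ShellInspector(HTMLParser):
    """Count visible text and detect app-shell markers in raw HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.text_chars = 0
        self.script_tags = 0
        self.has_mount_point = False
        self._code_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._code_depth += 1
            if tag == "script":
                self.script_tags += 1
        if dict(attrs).get("id") in SPA_MOUNT_IDS:
            self.has_mount_point = True

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._code_depth:
            self._code_depth -= 1

    def handle_data(self, data):
        if not self._code_depth:
            self.text_chars += len(data.strip())


def needs_browser(raw_html: str, min_text_chars: int = 500) -> bool:
    """Route to a headless browser only when the static HTML looks like a shell."""
    inspector = ShellInspector()
    inspector.feed(raw_html)
    inspector.close()
    thin = inspector.text_chars < min_text_chars
    # Thin pages with no scripts are simply short static pages: keep them static.
    return thin and (inspector.has_mount_point or inspector.script_tags > 0)
```

In a pipeline, every URL is fetched statically first. Only responses for which `needs_browser()` returns True are re-queued for rendering, so browser cost is paid only where the static response falls short.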

Full Web Scraping Tools Comparison: Performance Benchmarks

Our engineering team ran standardized tests of the best web scraping tools of 2025 across 15 website categories, measuring performance, success rates, and resource consumption. Here’s what the data reveals about modern web scraping software:

Open Source Frameworks: Scrapy

Scrapy remains the performance leader among developers who need fine-grained control. Our distributed setup across 12 worker nodes sustained 2,400 requests/minute over 72-hour runs.

- Ease of Use Score: 6.2/10 (steep learning curve, excellent documentation)

- Cost Structure: $0 for software + $850-$1,400/month for infrastructure at 50 million pages/month

- Market Share: 28% of Python-based scraping projects

- Best for: Python teams with experience building custom scraping pipelines

- Maintenance Hours: 25-35 hours per month for middleware updates and selector maintenance
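Scrapy's throughput is governed mostly by a handful of settings. The fragment below is a hypothetical `settings.py` starting point. Every name is a standard Scrapy setting, but the values are assumptions to tune per target. It is not the configuration behind the benchmark above.

```python
# settings.py: hypothetical starting values for a high-throughput crawl.
BOT_NAME = "catalog_crawler"  # hypothetical project name

# Global and per-domain concurrency (Scrapy's defaults are 16 and 8).
CONCURRENT_REQUESTS = 64
CONCURRENT_REQUESTS_PER_DOMAIN = 8

# AutoThrottle adapts download delays to observed server latency.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 1.0
AUTOTHROTTLE_MAX_DELAY = 30.0
AUTOTHROTTLE_TARGET_CONCURRENCY = 4.0

# Retry transient failures (the default RETRY_TIMES is 2).
RETRY_ENABLED = True
RETRY_TIMES = 3
RETRY_HTTP_CODES = [500, 502, 503, 504, 522, 524, 408, 429]

# Give up on slow responses sooner than the 180-second default.
DOWNLOAD_TIMEOUT = 30

# Honor robots.txt rules.
ROBOTSTXT_OBEY = True
```

Core Scrapy schedules requests within a single process. Spreading one crawl across several worker nodes, as in the 12-node setup above, also requires a shared request queue, for example a Redis-backed scheduler extension.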

Beautiful Soup

Beautiful Soup excels at simple scraping tasks. Parsing speeds reach 3,200 pages/minute on static HTML with minimal memory usage (18 MB average).

- Ease of Use Score: 8.7/10 (Intuitive API, rapid prototyping)

- Cost Structure: $0 for software + $180-$320 per month for infrastructure for light operations

- Market Share: 41% of Python scraping scripts (often combined with the Requests library)

- Best for: Data analysts and researchers extracting structured data from static sites

- Limitation: No support for JavaScript rendering; fails on client-side-rendered web applications

Scrapy vs. Selenium: An Architectural Comparison

The usual Scrapy vs. Selenium comparison ignores an architectural reality: the two tools solve different problems. Scrapy excels at high-throughput static scraping; Selenium enables browser automation for dynamic content. Modern projects often combine both: Scrapy for request handling and Selenium for JavaScript-intensive pages.

Browser Automation Tools: Puppeteer

Puppeteer leads JavaScript-based browser automation, reaching 240 requests/minute on optimized configurations. Its Chrome DevTools Protocol integration provides granular control over network interception and resource blocking.

- Ease of Use Score: 7.1/10 (Node.js experience required)

- Cost Structure: $0 for software + $2,200-$3,600/month for 10 million pages with browser automation

- Market Share: 19% of JavaScript scraping projects

- Best for: Teams with Node.js infrastructure scraping SPAs and dynamic panels

- Anti-detection: Excellent with stealth plugins (78% success rate on protected sites)
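Resource blocking is one of the simplest throughput wins in browser automation, because images, fonts, and media rarely contain the data being extracted. Puppeteer does this through request interception in Node.js. To keep this guide's examples in one language, the sketch below shows the equivalent using the route handler of Playwright for Python (`page.route()`). The blocklist is an assumption; keep stylesheets if your extraction depends on layout or visibility.

```python
# Resource types that rarely carry scrapeable data (assumed blocklist).
BLOCKED_RESOURCE_TYPES = frozenset({"image", "media", "font", "stylesheet"})


def should_block(resource_type: str) -> bool:
    """Return True for requests that can be aborted without losing data."""
    return resource_type in BLOCKED_RESOURCE_TYPES


async def block_heavy_resources(route) -> None:
    """Route handler for Playwright's async API.

    route.request.resource_type, route.abort() and route.continue_() are
    Playwright APIs; this module itself does not import Playwright.
    """
    if should_block(route.request.resource_type):
        await route.abort()
    else:
        await route.continue_()


# Usage inside an async Playwright session (not executed here):
#     await page.route("**/*", block_heavy_resources)
#     await page.goto("https://example.com/products")
```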

Puppeteer vs. Playwright: Which to Choose?

Playwright offers superior cross-browser compatibility (Chrome, Firefox, WebKit) and more robust selectors with auto-wait mechanisms. Puppeteer maintains a slight performance advantage (12% faster in our benchmarks), but Playwright’s API stability reduces maintenance burden by approximately 30%. For enterprise-level data extraction automation, Playwright’s reliability justifies the slight speed disadvantage.

Selenium

Selenium has evolved from a testing tool into a general-purpose browser automation tool. In Selenium 4, WebDriver BiDi protocol support improved performance by 34% compared to classic WebDriver implementations.

- Ease of Use Score: 6.8/10 (large ecosystem, verbose syntax)

- Cost Structure: $0 software + $2,800-$4,200 per month for browser-based scraping at scale

- Market Share: 15% of scraping projects (decreasing from 31% in 2022)

- Best for: Organizations with existing Selenium testing infrastructure

- Consideration: Slower than Puppeteer/Playwright; consider it mainly for compatibility needs

Enterprise Web Scraping Solutions

ScrapingBee

ScrapingBee offers managed scraping with rotating IPs and built-in anti-bot handling. This enterprise web scraping solution routes requests through residential proxy pools with automatic retry logic.

- Ease of Use Score: 9.1/10 (API-first, no infrastructure management)

- Cost Structure: $49-$449/month for 100,000-1 million API credits + overage fees

- Performance: 850 sustained requests/minute, 89% success rate on anti-bot sites

- Ideal for: Rapid deployment without DevOps overhead, unpredictable scraping volumes

- Hidden cost: At scale (50+ million pages/month), pricing ranges from $12,000 to $18,000 per month, compared to $2,400 per month for a self-managed setup
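Integrating a managed API like ScrapingBee usually comes down to a single HTTP call. The sketch below assumes ScrapingBee's HTTP endpoint and its `api_key`, `url`, and `render_js` query parameters. Verify the parameter names and credit costs against ScrapingBee's current API documentation before relying on it. The helper names are hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

API_ENDPOINT = "https://app.scrapingbee.com/api/v1/"  # assumed endpoint


def scrapingbee_url(api_key: str, target_url: str, render_js: bool = False) -> str:
    """Build a ScrapingBee request URL for target_url (hypothetical helper).

    render_js toggles headless rendering; rendered requests consume more
    API credits than plain ones, so keep it off for server-rendered pages.
    """
    params = {
        "api_key": api_key,
        "url": target_url,  # urlencode percent-encodes the target URL
        "render_js": "true" if render_js else "false",
    }
    return f"{API_ENDPOINT}?{urlencode(params)}"


def fetch_via_scrapingbee(api_key: str, target_url: str, render_js: bool = False) -> bytes:
    """Fetch target_url through ScrapingBee (performs a network call)."""
    with urlopen(scrapingbee_url(api_key, target_url, render_js), timeout=60) as resp:
        return resp.read()
```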

ScraperAPI

ScraperAPI offers similar proxy-based scraping with geo-targeting. It recently upgraded its infrastructure, improving response times by 28%.

- Ease of Use Score: 9.3/10 (easiest integration path)

- Cost Structure: $49-$249/month for 100,000-5 million API calls

- Performance: 720 requests/minute, 87% success rate on JavaScript sites

- Best for: Startups and agencies without dedicated infrastructure

- Consideration: Less customization compared to self-hosted frameworks

Best Web Scraping Software for Beginners

New teams consistently make three mistakes when selecting data extraction tools: overestimating what static parsers can handle, underestimating maintenance costs, and choosing web scraping tools by popularity rather than architectural fit.

Recommended Starter Path

Start with Beautiful Soup for proofs of concept on 3-5 target websites. If JavaScript rendering is required (disable JavaScript in your browser; if the content disappears, you need browser automation), switch to Playwright with TypeScript. This combination covers 81% of use cases while keeping complexity manageable.
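The "disable JavaScript" check can also be scripted for each proof-of-concept site. Fetch the raw server response, where no JavaScript runs, and test whether text you can see in a normal browser is present. This is a rough sketch: the regex-based tag stripping is a deliberate simplification, and the helper names and sample phrases are hypothetical.

```python
from __future__ import annotations

import html
import re
from urllib.request import Request, urlopen


def fetch_raw_html(url: str, timeout: float = 10.0) -> str:
    """Fetch the server response only; no JavaScript runs here."""
    req = Request(url, headers={"User-Agent": "poc-check/0.1"})
    with urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def _visible_text(raw_html: str) -> str:
    # Drop <script>/<style> bodies, strip tags, decode entities, collapse spaces.
    no_code = re.sub(r"(?is)<(script|style)\b.*?</\1\s*>", " ", raw_html)
    text = html.unescape(re.sub(r"(?s)<[^>]+>", " ", no_code))
    return " ".join(text.split())


def requires_browser(raw_html: str, expected_phrases: list[str]) -> bool:
    """True if any phrase visible in a real browser is missing from raw HTML."""
    text = _visible_text(raw_html).casefold()
    return any(" ".join(p.split()).casefold() not in text for p in expected_phrases)
```

A phrase may be missing from the visible text but present inside an embedded JSON `<script>` blob. In that case the data may still be reachable without a browser by parsing that blob directly.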

Avoid: Starting with Scrapy or Selenium. The learning curve delays time to value by 6 to 10 weeks compared to simpler alternatives. Adopt these tools when scaling to more than 5 million pages per month or requiring custom middleware.

Enterprise Web Scraping Comparison: Architecture Decisions

Large-scale enterprise web scraping presents challenges that are invisible in small volumes: IP rotation strategies, rate limiting coordination, distributed queue management, and data validation pipelines.
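Rate limiting coordination usually starts with one token bucket per target domain, so a single slow or strict site never throttles the rest. This in-process sketch coordinates only one worker. Across a distributed fleet, the bucket state would have to live in shared storage such as Redis. The class names and default rates are hypothetical.

```python
from __future__ import annotations

import time
from typing import Callable
from urllib.parse import urlsplit


class TokenBucket:
    """Allow `rate` requests/second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.updated = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class DomainRateLimiter:
    """One token bucket per hostname (hypothetical defaults: 2 req/s, burst 5)."""

    def __init__(self, rate: float = 2.0, capacity: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._rate = rate
        self._capacity = capacity
        self._clock = clock
        self._buckets: dict[str, TokenBucket] = {}

    def try_acquire(self, url: str) -> bool:
        """True if a request to url may be sent now; otherwise re-queue it."""
        host = urlsplit(url).hostname or ""
        bucket = self._buckets.get(host)
        if bucket is None:
            bucket = TokenBucket(self._rate, self._capacity, self._clock)
            self._buckets[host] = bucket
        return bucket.try_acquire()
```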

When to Build Custom Infrastructure vs. Managed Solution

Our analysis of 230 enterprise deployments reveals clear patterns:

Build custom infrastructure when:

- Monthly volume exceeds 100 million pages (cost break-even point)

- Target sites require sophisticated fingerprinting

- Data pipelines integrate with proprietary systems

- Compliance demands local data processing

- The team includes more than two engineers with scraping experience

Use managed solutions when:

- Volume is less than 50 million pages/month

- Speed to market is crucial (launch in days rather than months)

- Engineering resources are focused on the core product

- Scraping is ancillary to the core business

- Anti-bot challenges exceed the team’s capacity

Competitive Landscape: How Enterprise Web Scraping Solutions Compare

We have compared our data extraction tools with Octoparse, Zyte, and Apify across more than 40 industries. Among the best web scraping tools of 2025, the key differentiator is architectural philosophy:

Zyte (formerly Scrapinghub)

Offers managed Scrapy hosting with browser rendering plugins. Ideal for teams already using Scrapy and needing to scale their infrastructure. Pricing starts at $450 per month; enterprise contracts average $6,800 per month for 50 million pages. Its Smart Proxy Manager achieves a 91% success rate on anti-bot websites.

However, Zyte has some important limitations to consider:

- While Zyte hosts your Scrapy spiders, you still write and maintain the code yourself; it’s not a fully managed solution

- Enterprise contracts averaging approximately $6,800 per month can be prohibitive for mid-size teams

- Requires Scrapy knowledge, so it’s not ideal if your team isn’t already familiar with the framework

- Multiple product offerings (Zyte API, Scrapy Cloud, Smart Proxy Manager) can create confusion

Apify

Offers a marketplace model with pre-built scrapers (called actors) for common websites. Excellent for non-technical users targeting popular platforms (Instagram, LinkedIn, Amazon). Pricing per actor ranges from $29 to $499 per month. Limitation: less flexibility for custom extraction logic.

Octoparse

Focuses on visual scraping with point-and-click interfaces. It offers the lowest technical barrier but limits architectural control. Pricing ranges from $79 to $399 per month for 100,000 to 5 million pages.

Hidden Costs of Web Scraping Tools

Beyond the obvious software and infrastructure expenses for web scraping software, five cost categories consistently surprise organizations:

Selector Maintenance (18-40 hours/month)

Selector maintenance is a hidden cost of most data extraction tools: when target websites change their layouts, selectors break and the scraping logic has to be updated. Monitoring systems and automated remediation reduce this to 8-12 hours/month. Budget $2,400-$6,000/month in engineering time.
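The monitoring that cuts selector maintenance time can start very simply. Track how often each field is actually extracted, and flag fields whose fill rate drops sharply against a historical baseline; that drop is the typical signature of a layout change. The field names, the baseline values, and the 20-point drop threshold below are hypothetical.

```python
from __future__ import annotations

from collections.abc import Iterable, Mapping


def field_fill_rates(records: Iterable[Mapping[str, object]], fields: list[str]) -> dict[str, float]:
    """Share of records in which each field was extracted with a non-empty value."""
    counts = dict.fromkeys(fields, 0)
    total = 0
    for rec in records:
        total += 1
        for f in fields:
            if rec.get(f) not in (None, "", [], {}):
                counts[f] += 1
    return {f: (counts[f] / total if total else 0.0) for f in fields}


def broken_selectors(
    rates: Mapping[str, float],
    baseline: Mapping[str, float],
    max_drop: float = 0.2,
) -> list[str]:
    """Fields whose fill rate fell more than max_drop below their baseline."""
    return sorted(f for f, r in rates.items() if baseline.get(f, 0.0) - r > max_drop)
```

Run the check on every batch and alert on a non-empty result. A sudden drop in the `price` fill rate points to a broken price selector long before anyone notices missing data downstream.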

Anti-bot Adaptation (15-35 hours/month)

Sites update their detection systems quarterly. Rotating IP strategies, fingerprint updates, and behavioral modeling require continuous refinement. Managed solutions eliminate this work entirely; self-hosted teams should budget $2,000-$5,000/month.

Infrastructure Scaling Complexity

At enterprise scale, supporting infrastructure becomes a cost of its own. Kubernetes clusters, queue management (Redis/RabbitMQ), distributed storage (S3), and monitoring (Prometheus/Grafana) add 25% to 40% overhead on top of raw computing costs. A $4,000/month data extraction cluster requires $1,000 to $1,600 in supporting infrastructure.

Data Quality Validation

Extraction errors appear on 2% to 8% of pages, even with robust parsers. Validation processes, deduplication, and anomaly detection consume 10% to 15% of the total processing budget.
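A validation stage typically checks each record against a schema and drops exact duplicates before anything reaches storage. The sketch below assumes records arrive as JSON-serializable dicts with already-normalized numeric prices. The schema and rules are hypothetical.

```python
from __future__ import annotations

import hashlib
import json
from collections.abc import Iterable, Iterator

REQUIRED_FIELDS = ("url", "title", "price")  # hypothetical schema


def validate(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record passes."""
    problems = [f"missing {f}" for f in REQUIRED_FIELDS if not record.get(f)]
    price = record.get("price")
    if price:
        try:
            if float(price) <= 0:
                problems.append("non-positive price")
        except (TypeError, ValueError):
            problems.append(f"unparseable price {price!r}")
    return problems


def content_key(record: dict) -> str:
    """Stable hash of the record's content, independent of key order."""
    canonical = json.dumps(record, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def clean(records: Iterable[dict]) -> Iterator[dict]:
    """Yield valid, first-seen records; drop invalid ones and exact duplicates."""
    seen: set[str] = set()
    for rec in records:
        if validate(rec):
            continue  # in practice, route rejects to a review queue
        key = content_key(rec)
        if key in seen:
            continue
        seen.add(key)
        yield rec
```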

Legal and Compliance Expenses

Terms of service reviews, robots.txt compliance checks, rate limit implementation, and data privacy controls require legal advice (initially $3,000–$8,000), in addition to ongoing monitoring.
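robots.txt checks are among the few compliance steps that can be fully automated. Python's standard library ships `urllib.robotparser` for this. The sketch below parses rules that have already been fetched and filters a URL list; for a live site, call `set_url()` and `read()` instead. The bot name is hypothetical. The check covers robots.txt only, not terms-of-service or privacy review.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been fetched."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def polite_plan(parser: RobotFileParser, user_agent: str,
                urls: list[str]) -> tuple[list[str], float | None]:
    """Drop disallowed URLs and report the site's requested crawl delay (if any)."""
    allowed = [u for u in urls if parser.can_fetch(user_agent, u)]
    return allowed, parser.crawl_delay(user_agent)
```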

Example Total Cost of Ownership

A 20-million-page-per-month operation running self-hosted Scrapy backed by Playwright:

- Infrastructure: $1,800/month

- Proxy Services: $600/month

- Engineering (25% FTE): $3,200/month

- Monitoring and Support Systems: $450/month

Total: $6,050/month or $0.30 per 1,000 pages

Equivalent Managed Service Pricing: $8,400–$12,000/month. That 40–98% premium buys risk transfer and eliminates the maintenance burden.
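The arithmetic behind this example is reproduced below so the figures can be re-run with your own inputs. All values come from the breakdown above; only the variable and function names are new.

```python
MONTHLY_COSTS = {  # USD/month, from the 20M-page example
    "infrastructure": 1_800,
    "proxy_services": 600,
    "engineering_25pct_fte": 3_200,
    "monitoring_support": 450,
}
PAGES_PER_MONTH = 20_000_000
MANAGED_QUOTES = (8_400, 12_000)  # managed-service range, USD/month


def cost_per_thousand(total_usd: float, pages: int) -> float:
    """Monthly cost divided by thousands of pages scraped."""
    return total_usd / (pages / 1_000)


def premium(managed_usd: float, self_hosted_usd: float) -> float:
    """Managed price expressed as a fractional premium over self-hosting."""
    return managed_usd / self_hosted_usd - 1


total = sum(MONTHLY_COSTS.values())
print(f"Self-hosted total: ${total:,}/month")
print(f"Cost per 1,000 pages: ${cost_per_thousand(total, PAGES_PER_MONTH):.4f}")
for quote in MANAGED_QUOTES:
    print(f"Managed at ${quote:,}/month: {premium(quote, total):.0%} premium")
```

The exact premiums come out to roughly 39% and 98%, and the unit cost to $0.3025 per 1,000 pages. The text rounds these to 40–98% and $0.30.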

Best Web Scraping Tools 2025: Technical Stack Recommendations

For Teams Developing Custom Data Extraction Software

Python Stack: Scrapy + Playwright + Redis + PostgreSQL + Docker

- Strengths: Mature ecosystem, extensive libraries, strong community

- Throughput: 1,800+ requests/min with proper architecture

- Team Requirements: 1-2 engineers with Python experience

- Development Time: 8-12 weeks for production

JavaScript Stack: Node.js + Puppeteer + Bull + MongoDB + Kubernetes

- Strengths: Unified language, excellent browser automation, modern tools

- Throughput: 1,200+ requests/min optimized

- Team Requirements: 1-2 engineers with Node.js and DevOps experience

- Development Time: 6-10 weeks for production

Hybrid Approach: Scrapy for Orchestration + Playwright for Rendering

- Strengths: Best-in-class tool selection, optimal performance/cost ratio

- Throughput: Over 2,000 requests/min with intelligent routing

- Team Requirements: 2-3 engineers with multi-language programming skills

- Development Time: 10-14 weeks for production
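For the hybrid stack, the third-party scrapy-playwright plugin is one common way to let Scrapy hand selected requests to Playwright. Everything else goes through Scrapy's regular downloader. Below is a sketch of the relevant `settings.py` lines, assuming scrapy-playwright is installed. `request_meta()` is a hypothetical helper, and `needs_js` would come from whatever routing rule you apply.

```python
# settings.py excerpt, assuming the scrapy-playwright plugin is installed.
# Requests are rendered in Playwright only if they opt in via meta;
# all others are handled by Scrapy's regular HTTP download handler.
DOWNLOAD_HANDLERS = {
    "http": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
    "https": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
}
# scrapy-playwright requires Twisted's asyncio-based reactor.
TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"

PLAYWRIGHT_BROWSER_TYPE = "chromium"
PLAYWRIGHT_LAUNCH_OPTIONS = {"headless": True}


def request_meta(needs_js: bool) -> dict:
    """Hypothetical helper: only JS-dependent pages opt into the browser.

    Use as scrapy.Request(url, meta=request_meta(needs_js)).
    """
    return {"playwright": True} if needs_js else {}
```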

Summary of Best Web Scraping Tools 2025

- Best open-source web scraping tool: Scrapy

- Best headless browser for data extraction: Playwright

- Best enterprise web scraping solution: ScrapingBee

- Recommended hybrid approach: Scrapy + Playwright

The Future of Web Scraping Software: 2025 Trends

Three changes are transforming the data extraction tools landscape:

AI-Driven Extraction

LLM-based scrapers interpret page semantics instead of requiring explicit selectors. Early implementations show 89% accuracy on previously unseen page structures but cost 15-20 times more per page, which makes them cost-effective only for high-value, low-volume extractions.

Serverless Scraping Architecture

AWS Lambda, Google Cloud Functions, and Azure Functions enable event-driven scraping without persistent infrastructure. This is cost-effective for sporadic scraping patterns, but cold starts add 800–2,400 ms of latency, which makes it unsuitable for real-time use cases.

Blockchain-Based Residential Proxies

Decentralized IP sharing networks promise lower costs and improved geographic distribution. Current implementations show 23% higher success rates on anti-bot websites, but suffer from inconsistent performance (latency ranging from 400 to 3,200 ms).

How to Choose Your Web Scraping Tools: Decision Framework

Match your requirements to each tool’s strengths:

Select Beautiful Soup when:

- The pages are static HTML

- The volume is less than 5 million pages per month

- The team lacks DevOps resources

- The turnaround time is less than 2 weeks

Select Scrapy when:

- The volume exceeds 10 million pages per month

- Custom middleware is needed

- The team is experienced in Python

- The team can invest 8–12 weeks in building the infrastructure

Select Playwright when:

- Target websites use modern JavaScript frameworks

- Cross-browser compatibility is needed

- The team prefers TypeScript

- The budget allows for infrastructure costs 8-12 times higher than static parsing

Select managed services when:

- Speed to market is critical

- Volume is less than 50 million pages per month

- The team is focused on data analysis rather than scraping infrastructure

- Anti-bot challenges are severe
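The checklist above can be encoded as a small function, which makes the precedence between rules explicit. The guide does not say which rule wins when several apply. The ordering used here is an assumption: managed service first, then JavaScript needs, then volume. The field names are also assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    pages_per_month: int
    needs_js_rendering: bool
    python_team: bool
    speed_to_market_critical: bool
    severe_anti_bot: bool


def recommend(req: Requirements) -> str:
    """Apply the checklist in an assumed priority order."""
    # Managed services: launch speed or anti-bot pressure dominates, <50M pages.
    if req.pages_per_month < 50_000_000 and (
        req.speed_to_market_critical or req.severe_anti_bot
    ):
        return "managed service"
    if req.needs_js_rendering:
        return "Playwright"
    if req.pages_per_month > 10_000_000 and req.python_team:
        return "Scrapy"
    if req.pages_per_month < 5_000_000:
        return "Beautiful Soup"
    # e.g. 5M-10M static pages: the checklist gives no single answer.
    return "no clear match: evaluate Scrapy vs. a managed service"
```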

When to Skip Web Scraping Tools Entirely

All the web scraping software above, including hosted options like Zyte, still requires you to write code, manage spiders, and handle maintenance. For teams that need data without the engineering overhead, a fully managed web scraping service handles everything: development, infrastructure, anti-bot handling, and ongoing maintenance.

This approach makes sense when:

- You need data but don’t have scraping expertise in-house

- Your team’s time is better spent on analysis, not extraction

- You’re scraping at scale and don’t want infrastructure headaches

- You need guaranteed delivery and SLAs

DIY vs. Hosted vs. Fully Managed: Web Scraping Tools Comparison

| Factor | DIY (Scrapy) | Hosted (Zyte) | Fully Managed (Scraping Pros) |
|---|---|---|---|
| You write code | Yes | Yes | No |
| You maintain spiders | Yes | Yes | No |
| Infrastructure | You manage | Included | Included |
| Anti-bot handling | You build | Included | Included |
| Setup time | 20-40 hrs | 10-20 hrs | 0 hrs |
| Monthly maintenance | 25-35 hrs | 15-25 hrs | 0 hrs |
| Best for | Dev teams with time | Scrapy experts | Teams focused on using data |

How Enterprise Web Scraping Is Redefined in 2025

The Central Impact of Generative AI (GenAI)

Generative AI is not just a consumer of the data gathered by data extraction tools; it is transforming web scraping software itself. With LLM-driven scraping, web scraping tools will integrate directly with large language models (LLMs), enabling smarter, more semantic data extraction.

Regulation, Ethics, and Legal Compliance

Growing privacy awareness and the massive use of data to train AI are forcing a tightening and clarification of the legal framework.

At Scraping Pros, we are at the forefront of using LLMs to make the automation process smarter. We comply with international data and privacy regulations (GDPR, CCPA) and apply automated compliance strategies. Security, regulatory compliance, and corporate responsibility are central to our services.

Conclusion: Web Scraping Tools Selection in 2025

The best web scraping setup for your needs isn’t a single tool but an architecture tailored to your specific requirements. Successful teams at scale combine multiple web scraping tools intelligently rather than forcing one solution onto every scenario.

After testing 47 data extraction tools on 10 billion pages, we found that 89% of scraping failures are due to architectural incompatibility, not the tool’s capabilities. The fact that Beautiful Soup fails on JavaScript sites doesn’t make it inferior; it makes it the wrong tool for the task. Similarly, using Playwright for static HTML wastes 8 to 12 times more resources than necessary.

The enterprise web scraping landscape favors teams that understand the trade-offs between cost and capability and create decision frameworks that optimize tool selection for each objective. Whether you build a custom infrastructure, leverage managed services, or partner with specialists like Scraping Pros, success requires aligning your architecture with business needs.

Ready to Optimize Your Data Extraction Tools?

Our engineering team conducts architectural audits of web scraping software that identify cost reduction opportunities averaging 40–60%, while improving extraction success rates. We’ve scaled operations from 5 million to 500 million pages per month across e-commerce, real estate, financial services, and competitive intelligence.

At Scraping Pros, we believe web scraping is not just data extraction, but information engineering that drives intelligent decisions. Our mission: to make web data accessible, ethical, and scalable for everyone.

Want to skip the tool selection headache? Get a free quote and data sample.

Contact Scraping Pros to evaluate which web scraping tools fit your enterprise strategy, or to build scalable data extraction platforms that balance performance, cost, and maintenance expenses. We design solutions, not just provide tools.

FAQ: The Real Guide to Web Scraping Tools in 2025

What’s the best web scraping tool in 2025?

There’s no one-size-fits-all answer: the best web scraping tool in 2025 depends on your architecture. Scrapy leads in raw speed (2,400 req/min) for static HTML, Playwright dominates JavaScript-heavy websites, and Beautiful Soup wins for simplicity. On protected sites, Puppeteer with stealth plugins reaches a 78% success rate. The Scrapy + Playwright hybrid remains the optimal balance, offering 94% compatibility and cutting costs by up to 60% compared to browser-only setups.

When should I use Beautiful Soup, Scrapy, or Playwright?

When selecting web scraping software, the right choice depends on your content type, volume, and team expertise:

- Beautiful Soup: static HTML, <5M pages/month, small projects, minimal DevOps

- Scrapy: >10M pages/month, custom middleware, Python expertise, scalable infrastructure

- Playwright: modern JS frameworks (React, Vue, Angular), TypeScript teams, higher infra tolerance

Each data extraction tool shines under different conditions — the secret is matching the tool to your workflow.

Should I build custom infrastructure or use managed services?

Build custom web scraping infrastructure if you handle >100M pages/month, require strict compliance, or have in-house scraping engineers.

Use managed web scraping services if you process <50M pages/month or need quick deployment with minimal maintenance. While self-hosting is cheaper, managed solutions can save 40–70 monthly engineering hours on maintenance and anti-bot updates.

How does web scraping actually work?

There are three main architectures for web scraping tools:

- Static parsers (Beautiful Soup, lxml): fast but fail on JS-rendered sites

- Headless browsers (Puppeteer, Playwright): slower but handle dynamic content

- Hybrid frameworks (Scrapy + Splash, Playwright with interception): smart routing that mixes both — reducing costs by 40–60% while maintaining compatibility

What metrics matter when evaluating web scraping tools?

Focus on these five metrics for data extraction tools:

- Processing speed: up to 2,400 req/min (Scrapy)

- Anti-detection resilience: Puppeteer stealth 78% success

- Cost efficiency: $12–$340 per million pages

- Maintenance load: 2–40 hours/month

- Data accuracy: 2–31% error rate depending on site complexity

These metrics define ROI more than any single benchmark.

I’m a startup on a tight budget — where should I start with web scraping?

Begin simple with these web scraping tools:

- Use Beautiful Soup for proof-of-concept (3–5 sites)

- If content disappears when JS is off, move to Playwright

- Avoid Scrapy or Selenium early on — the setup time isn’t worth it yet

If you want plug-and-play web scraping software, ScrapingBee starts at $49/month and removes DevOps overhead so you can focus on insights instead of infrastructure.

Before getting started, make sure you fully understand what web scraping is and which web scraping tools best fit your needs.