Enterprise Web Scraping Tool Selection: Integration and Architecture Guide

Web scraping has gone from being a marginal technical practice to a core strategic capability within data-driven organizations. Today, companies that lead their markets are not simply asking which tool to use, but how to integrate web data extraction into their enterprise architecture, ensuring scalability, reliability, compliance, and business value.

This evolution marks a turning point: moving from isolated tools to scraping platforms designed to operate as critical data infrastructure.

From Basic Scripts to Enterprise Scraping Platforms

For years, scraping relied on ad hoc scripts, desktop tools, or solutions focused exclusively on “extracting HTML.” This approach worked while the data volume was limited and the use cases were not critical. However, the growth of digital marketplaces, the increasing sophistication of websites, and the need to integrate external data with internal systems have propelled scraping into a new era.

Today, enterprise web scraping faces entirely different challenges: continuous updates, multiple sources, structured and unstructured data, advanced anti-bot defenses, and, above all, the need for extracted data to be immediately available for analysis, automation, and decision-making.

This shift explains why the global market for web scraping tools and platforms maintains a trajectory of sustained growth. According to recent market research from MarketsandMarkets, the sector is driven primarily by solutions focused on integration, automation, and scalability.

If you’re just starting your journey in web scraping, check out our beginner’s guide to web scraping fundamentals to understand the basics before diving into enterprise solutions.

What Does “Enterprise Integration” Really Mean in Web Scraping?

Talking about enterprise integration is not just about connecting a tool to a database. It involves designing a continuous, governed, and observable data flow, where web scraping behaves like any other critical source of corporate information.

In an enterprise environment, scraping must:

- Feed production data pipelines, not manual processes.

- Connect with analytics systems, pricing engines, AI models, or BI tools.

- Respect security, compliance, and traceability policies, including GDPR, CCPA, and other data protection regulations.

- Scale seamlessly with increases in volume or complexity.

When these conditions are met, scraping ceases to be an “external input” and becomes a natural extension of the company’s data architecture. Learn more about our enterprise integration services and how we help companies achieve this transformation.

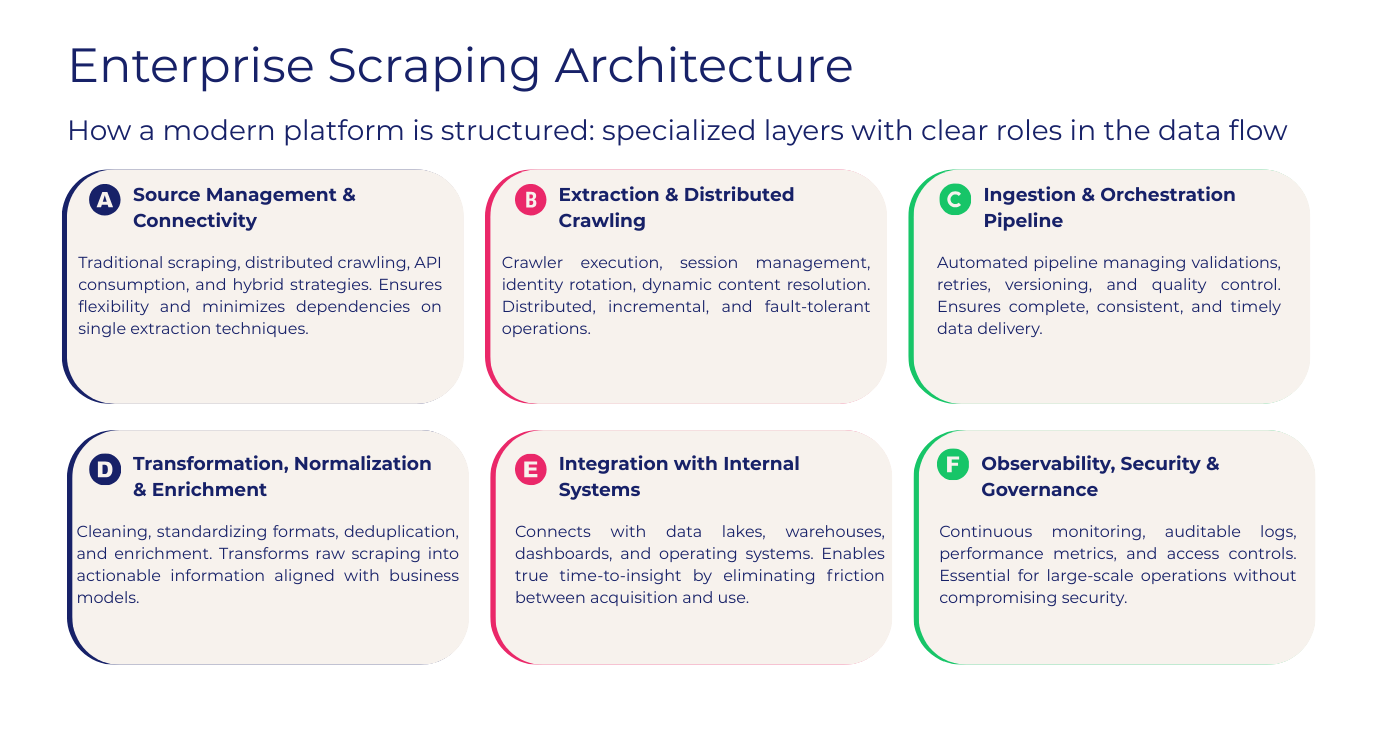

Enterprise Scraping Architecture: How a Modern Platform is Structured

A well-designed enterprise scraping architecture is not based on a single component, but rather on a set of specialized layers, each with a clear role within the data flow.

Source Management and Connectivity

This layer defines how and from where data is obtained. It includes traditional scraping, distributed crawling, consumption of public or private APIs, and hybrid API-first strategies. Its goal is to ensure flexibility in the face of changes to target sites and minimize rigid dependencies on a single extraction technique.

A good architecture allows switching between scraping and APIs without redesigning the entire system, which is crucial in dynamic environments. For a deeper understanding of when to use each approach, read our article on APIs vs Web Scraping: choosing the right strategy.
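One way to picture this interchangeability is a shared interface that both an API client and a scraper satisfy. The sketch below is illustrative only: the class names (`ApiSource`, `ScraperSource`, `FallbackSource`) and the stand-in fetch functions are hypothetical, not part of any specific platform.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


class Source(Protocol):
    """Anything that can return records for a given key."""

    def fetch(self, key: str) -> list[dict]: ...


@dataclass
class ApiSource:
    # `call` is a hypothetical stand-in for an HTTP client hitting an API.
    call: Callable[[str], list[dict]]

    def fetch(self, key: str) -> list[dict]:
        return self.call(key)


@dataclass
class ScraperSource:
    # `scrape` is a hypothetical stand-in for a crawler that parses HTML.
    scrape: Callable[[str], list[dict]]

    def fetch(self, key: str) -> list[dict]:
        return self.scrape(key)


@dataclass
class FallbackSource:
    """Try the primary source; fall back if it raises or returns nothing."""

    primary: Source
    secondary: Source

    def fetch(self, key: str) -> list[dict]:
        try:
            records = self.primary.fetch(key)
            if records:
                return records
        except Exception:
            pass  # a real system would log this failure instead of hiding it
        return self.secondary.fetch(key)


# Demo with fake sources: the API is down, so the scraper answers.
def broken_api(key: str) -> list[dict]:
    raise ConnectionError("API unavailable")


def fake_scraper(key: str) -> list[dict]:
    return [{"sku": key, "price": 19.99, "via": "scraper"}]


source = FallbackSource(ApiSource(broken_api), ScraperSource(fake_scraper))
records = source.fetch("A-100")
```

Because downstream code only depends on `fetch`, swapping the order of the two sources, or replacing one of them, does not require changes elsewhere in the pipeline.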

Extraction and Distributed Crawling Layer

This is where the heavy lifting happens: crawler execution, session management, identity rotation, dynamic content resolution, and frequency control. In enterprise contexts, this layer must be distributed, incremental, and fault-tolerant, avoiding unnecessary reloads and respecting access policies.

Efficiency at this stage directly impacts operating costs, stability, and data quality.
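As a rough illustration of "incremental" crawling with frequency control, the sketch below skips pages whose content hash has not changed and refuses to hit the same URL more often than a minimum interval. The `IncrementalCrawler` class, the in-memory stores, and the fake page dictionary are assumptions made for the example; a distributed deployment would keep this state in shared storage.

```python
import hashlib
from typing import Callable, Optional


class IncrementalCrawler:
    def __init__(self, fetch: Callable[[str], str], min_interval: float = 1.0):
        self.fetch = fetch                      # hypothetical page downloader
        self.min_interval = min_interval        # seconds between hits per URL
        self._seen_hash: dict[str, str] = {}    # url -> last content digest
        self._last_hit: dict[str, float] = {}   # url -> last request time

    def crawl(self, url: str, now: float) -> Optional[str]:
        """Return the page body if it is new or changed, otherwise None."""
        last = self._last_hit.get(url)
        if last is not None and now - last < self.min_interval:
            return None  # frequency control: requested too soon
        self._last_hit[url] = now
        try:
            body = self.fetch(url)
        except Exception:
            return None  # fault tolerance: keep the previous state, retry later
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        if self._seen_hash.get(url) == digest:
            return None  # unchanged content: nothing to reprocess
        self._seen_hash[url] = digest
        return body


# Demo against a fake site held in a dictionary.
url = "https://example.com/p/1"
pages = {url: "<html>price: 10</html>"}
crawler = IncrementalCrawler(fetch=pages.__getitem__, min_interval=1.0)

first = crawler.crawl(url, now=0.0)      # new page, returned
too_soon = crawler.crawl(url, now=0.5)   # inside the interval, skipped
unchanged = crawler.crawl(url, now=2.0)  # same content, skipped
pages[url] = "<html>price: 12</html>"
changed = crawler.crawl(url, now=4.0)    # content changed, returned
```

Passing `now` explicitly keeps the example deterministic; production code would normally read a clock instead.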

Ingestion and Orchestration Pipeline

Once extracted, the data enters an automated pipeline that manages validations, retries, versioning, and quality control. This layer ensures that the information arrives complete, consistent, and on time, even when unexpected changes occur in the sources.

Without this orchestration, scraping becomes fragile and dependent on constant human intervention. Modern orchestration platforms like Apache Airflow are essential components of enterprise scraping infrastructure.
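The retry and validation behavior described above can be sketched in plain Python, independent of any particular orchestrator. Everything here is hypothetical: the `REQUIRED_FIELDS` schema, the function names, and the flaky demo task are assumptions for illustration, and an orchestration platform would normally provide its own retry configuration.

```python
import time
from typing import Callable

REQUIRED_FIELDS = ("sku", "price")  # hypothetical minimal schema


def is_valid(record: dict) -> bool:
    """A record is valid when every required field is present and non-empty."""
    return all(record.get(field) not in (None, "") for field in REQUIRED_FIELDS)


def run_with_retries(
    task: Callable[[], list[dict]],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> list[dict]:
    """Run `task`, waiting base_delay * 2**(attempt - 1) between failures."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise  # give up and surface the error to the operator
            sleep(base_delay * 2 ** (attempt - 1))
    return []  # only reached when max_attempts < 1


def ingest(task: Callable[[], list[dict]], **retry_options):
    """Extract with retries, then split records into valid and rejected."""
    records = run_with_retries(task, **retry_options)
    valid = [r for r in records if is_valid(r)]
    rejected = [r for r in records if not is_valid(r)]
    return valid, rejected


# Demo: a task that times out twice before succeeding.
attempts = {"n": 0}


def flaky_extract() -> list[dict]:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TimeoutError("source timed out")
    return [{"sku": "A-100", "price": 19.99}, {"sku": "", "price": 5.0}]


delays: list[float] = []  # record the waits instead of actually sleeping
valid, rejected = ingest(flaky_extract, max_attempts=3, base_delay=0.5,
                         sleep=delays.append)
```

Keeping rejected records rather than discarding them silently makes it possible to audit quality problems at the source later.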

Transformation, Normalization, and Enrichment

Web data rarely arrives ready for use. Therefore, a mature architecture includes processes for cleaning, standardizing formats, deduplication, and enrichment with internal or third-party data. This is where scraping is transformed into actionable information, aligned with the business’s analytical models.
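A minimal sketch of these three steps, cleaning, deduplication, and enrichment, might look like the following. The record fields, the US-style price format (`$1,299.50`), and the category lookup are assumptions chosen for the example, not a description of any real dataset.

```python
from decimal import Decimal


def normalize(raw: dict) -> dict:
    """Clean one scraped record. Assumes prices like '$1,299.50'."""
    price_text = raw["price"].replace("$", "").replace(",", "").strip()
    return {
        "sku": raw["sku"].strip().upper(),
        "name": " ".join(raw["name"].split()),  # collapse stray whitespace
        "price": Decimal(price_text),
    }


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep one record per SKU; the most recent occurrence wins."""
    latest: dict[str, dict] = {}
    for record in records:
        latest[record["sku"]] = record
    return list(latest.values())


def enrich(records: list[dict], categories: dict[str, str]) -> list[dict]:
    """Attach an internal category (hypothetical lookup table) to each record."""
    return [
        {**record, "category": categories.get(record["sku"], "unknown")}
        for record in records
    ]


raw_records = [
    {"sku": " a-100 ", "name": "Wireless   Mouse", "price": "$1,299.50"},
    {"sku": "A-100", "name": "Wireless Mouse", "price": "1299.00"},
    {"sku": "b-200", "name": " USB  Hub ", "price": "$25"},
]
internal_categories = {"A-100": "peripherals"}

clean = enrich(
    deduplicate([normalize(r) for r in raw_records]),
    internal_categories,
)
```

Using `Decimal` instead of floats avoids rounding surprises when prices are later compared or aggregated.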

Integration with Internal Systems

The final layer connects the scraping output with the corporate ecosystem: data lakes, data warehouses, dashboards, decision engines, or operational systems. This integration shortens time-to-insight by eliminating friction between data acquisition and strategic use.
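To make the loading step concrete, the sketch below writes scraped prices into a table using an idempotent insert, so re-running a batch does not create duplicates. An in-memory SQLite database stands in for the corporate warehouse here, and the `competitor_prices` table and its columns are hypothetical.

```python
import sqlite3


def load_prices(conn: sqlite3.Connection, records: list[dict]) -> int:
    """Insert or overwrite rows keyed by (sku, marketplace); return row count."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS competitor_prices (
            sku TEXT,
            marketplace TEXT,
            price REAL,
            observed_at TEXT,
            PRIMARY KEY (sku, marketplace)
        )
        """
    )
    with conn:  # commits on success, rolls back if an insert fails
        conn.executemany(
            """
            INSERT OR REPLACE INTO competitor_prices
                (sku, marketplace, price, observed_at)
            VALUES (:sku, :marketplace, :price, :observed_at)
            """,
            records,
        )
    return conn.execute("SELECT COUNT(*) FROM competitor_prices").fetchone()[0]


conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse connection

monday = [
    {"sku": "A-100", "marketplace": "shop-x", "price": 19.99,
     "observed_at": "2025-01-06"},
    {"sku": "B-200", "marketplace": "shop-x", "price": 25.00,
     "observed_at": "2025-01-06"},
]
tuesday = [
    {"sku": "A-100", "marketplace": "shop-x", "price": 17.49,
     "observed_at": "2025-01-07"},
]

load_prices(conn, monday)
row_count = load_prices(conn, tuesday)  # updates A-100 instead of duplicating
current_price = conn.execute(
    "SELECT price FROM competitor_prices WHERE sku = 'A-100'"
).fetchone()[0]
```

A real warehouse would typically use its own bulk-load or merge mechanism; the point of the sketch is only that loads should be keyed and repeatable.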

Observability, Security, and Governance

Finally, an enterprise platform requires continuous monitoring, auditable logs, performance metrics, and access controls. This layer is essential for operating large-scale scraping without compromising security, compliance, or business continuity.

Industry research, such as Gartner’s Data Analytics research, emphasizes the critical importance of governance in modern data architectures.
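As a small illustration of the monitoring and audit-log ideas in this layer, the sketch below counts outcomes per run and keeps one structured JSON line per request. The `RunMetrics` class, the status labels, and the job name are all hypothetical; a production setup would more likely export these values to an existing metrics and logging stack.

```python
import json
import time
from collections import Counter
from typing import Callable


class RunMetrics:
    """Collect per-run counters and an append-only, JSON-formatted audit log."""

    def __init__(self, job: str, clock: Callable[[], float] = time.time):
        self.job = job
        self.clock = clock
        self.counts: Counter = Counter()
        self.events: list[str] = []

    def record(self, url: str, status: str, detail: str = "") -> None:
        self.counts[status] += 1
        event = {
            "ts": self.clock(),
            "job": self.job,
            "url": url,
            "status": status,
            "detail": detail,
        }
        # sort_keys keeps log lines stable and easy to compare across runs
        self.events.append(json.dumps(event, sort_keys=True))

    def success_rate(self) -> float:
        total = sum(self.counts.values())
        return self.counts["ok"] / total if total else 0.0


# Demo with a fixed clock so the output is deterministic.
metrics = RunMetrics(job="retail-prices", clock=lambda: 0.0)
metrics.record("https://example.com/p/1", "ok")
metrics.record("https://example.com/p/2", "ok")
metrics.record("https://example.com/p/3", "ok")
metrics.record("https://example.com/p/4", "blocked", detail="HTTP 403")

rate = metrics.success_rate()
```

An alert on a falling success rate is often the earliest signal that a source changed its layout or tightened its defenses.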

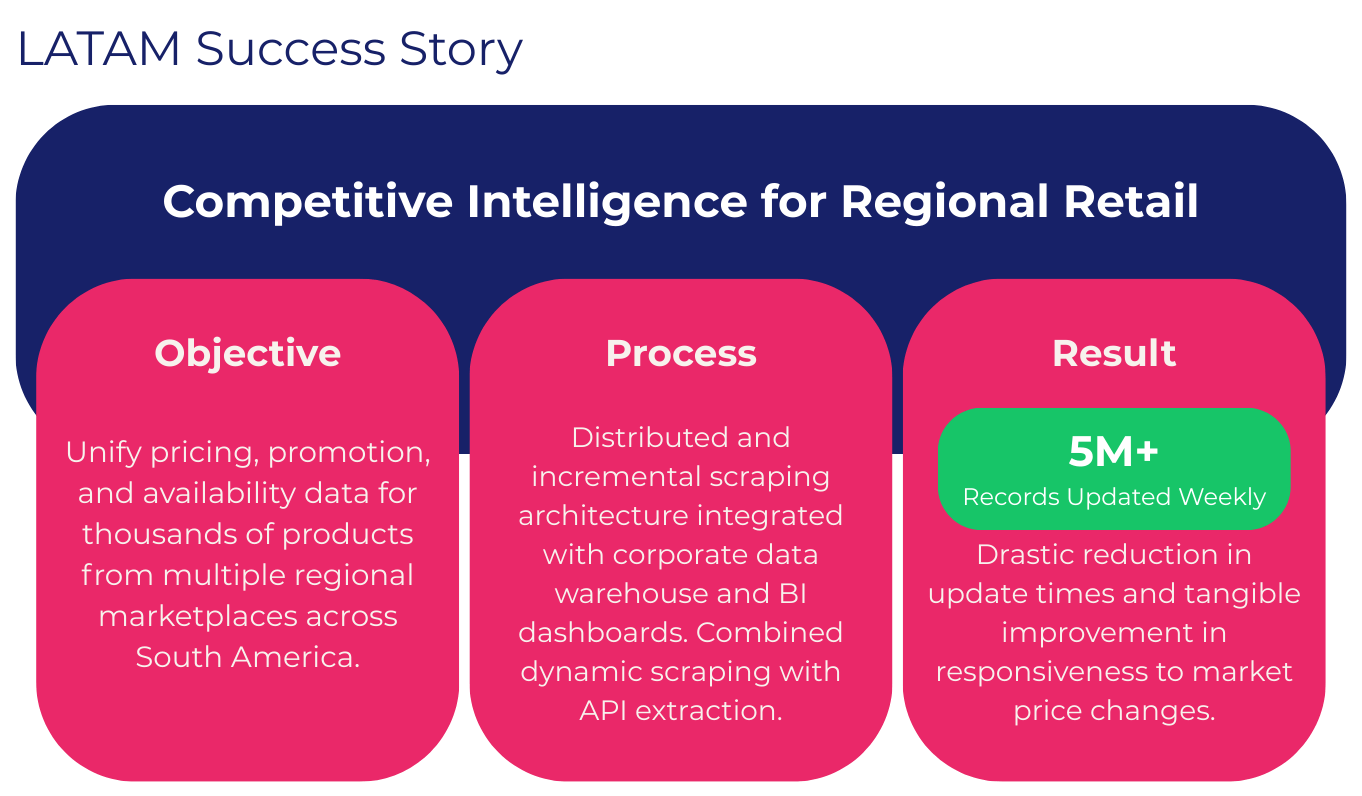

LATAM Success Story: Competitive Intelligence for Regional Retail

Scraping Pros worked with a retail group with a presence in South America that needed to improve its competitive analysis capabilities across digital channels.

Objective

To unify pricing, promotion, and availability data for thousands of products from multiple regional marketplaces.

Process

A distributed and incremental scraping architecture was designed, directly integrated with the corporate data warehouse and BI dashboards. The system combined dynamic scraping with extraction via APIs when available, optimizing costs and stability.

Result

More than 5 million records updated weekly, a drastic reduction in update times, and a tangible improvement in responsiveness to market price changes.

This type of project demonstrates how scraping, when properly integrated, directly impacts critical business decisions. Explore more success stories from our clients across different industries.

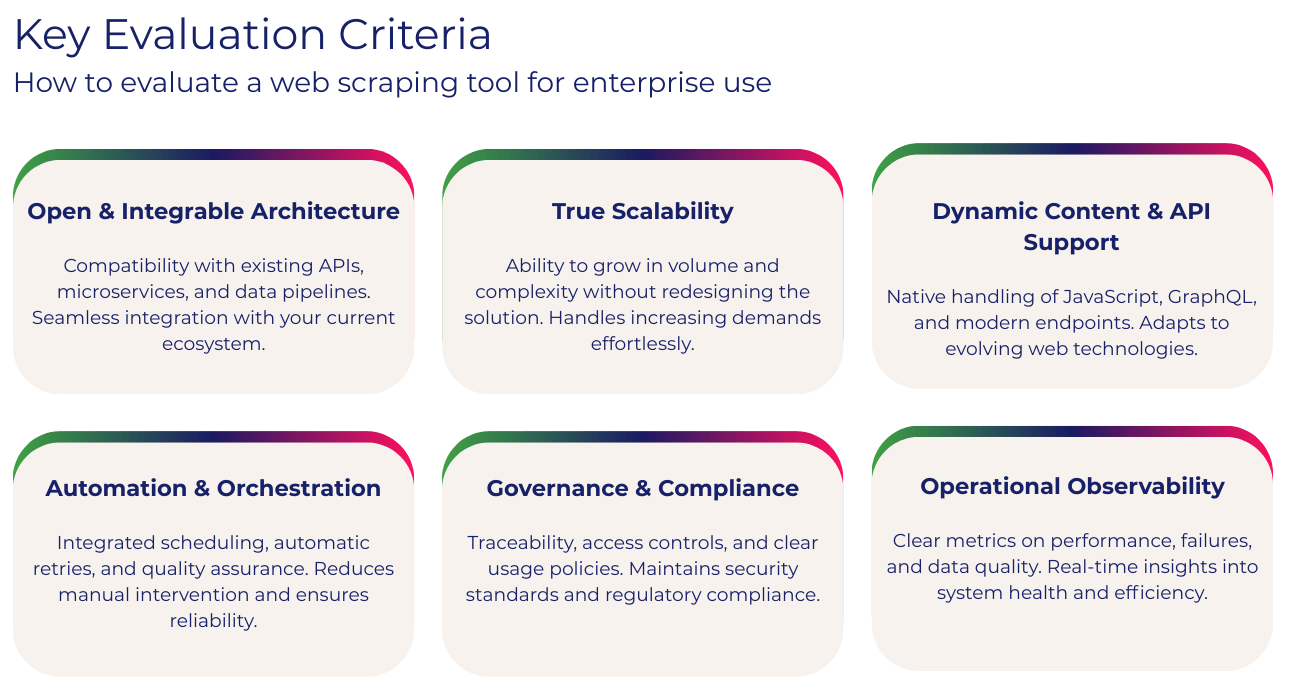

How to Evaluate a Web Scraping Tool for Enterprise Use

Choosing the right tool requires going beyond marketing or superficial comparisons. In enterprise environments, the evaluation should focus on integration capabilities, scalability, and resilience.

Key Evaluation Criteria

- Open and integrable architecture: compatibility with existing APIs, microservices, and pipelines

- True scalability: ability to grow in volume and complexity without redesigning the solution

- Support for dynamic content and APIs: native handling of JavaScript, GraphQL, and modern endpoints

- Automation and orchestration: integrated scheduling, retries, and quality assurance

- Governance and compliance: traceability, access controls, and clear usage policies aligned with modern data governance frameworks

- Operational observability: clear metrics on performance, failures, and data quality

An enterprise tool is not measured solely by how much data it can extract, but by how well that data is integrated and transformed into value for the organization.
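One simple way to apply the criteria above is a weighted scoring matrix. The sketch below is only an example: the weights, the 1-to-5 scores, and the tool names are invented for illustration, and each organization would set its own.

```python
# Hypothetical weights for the six criteria; they should sum to 1.0.
WEIGHTS = {
    "integration": 0.25,
    "scalability": 0.20,
    "dynamic_content": 0.15,
    "automation": 0.15,
    "governance": 0.15,
    "observability": 0.10,
}


def weighted_score(scores: dict[str, int]) -> float:
    """Combine 1-5 scores per criterion into a single weighted total."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total = sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)
    return round(total, 2)


# Invented candidates scored by an evaluation team.
candidates = {
    "tool_a": {"integration": 5, "scalability": 3, "dynamic_content": 4,
               "automation": 3, "governance": 2, "observability": 3},
    "tool_b": {"integration": 3, "scalability": 4, "dynamic_content": 4,
               "automation": 4, "governance": 4, "observability": 4},
}

ranking = sorted(candidates, key=lambda n: weighted_score(candidates[n]),
                 reverse=True)
```

In this invented case the tool with the strongest integration story still ranks second, because it scores poorly on governance; making the weights explicit forces that trade-off into the open.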

Need help choosing the right solution? Schedule a consultation with our enterprise scraping experts.

Trends Toward 2026–2027: Scraping as Critical Infrastructure

The global web scraping software market continues to expand. According to industry projections, the market size, which reached USD 162.85 million in 2025, will grow to approximately USD 173.95 million in 2027, with compound annual growth driven by automation, cloud integration, and AI capabilities.
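For readers who want to see what growth rate those two figures imply, the compound annual growth rate can be derived directly from them. The calculation below uses only the numbers quoted above and assumes a two-year span (2025 to 2027); it comes out at a little over 3% per year.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text, in USD millions.
cagr = implied_cagr(start_value=162.85, end_value=173.95, years=2)
print(f"Implied CAGR 2025-2027: {cagr:.2%}")
```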

Furthermore, other sources indicate that adoption of integrated and automated solutions exceeds 70% among companies focused on real-time analytics, solidifying web scraping as an integral part of corporate data infrastructure.

These trends demonstrate that integration and scalability will continue to be key factors in the selection of data tools and platforms in the coming years. Stay updated with our latest insights on web scraping trends and emerging technologies.

Conclusion: From Tactical Scraping to Sustainable Competitive Advantage

The evolution of web scraping tools reflects a deeper transformation: how organizations use external data to compete. In this new landscape, integration and architecture matter as much as the extraction itself.

Companies that adopt scraping platforms designed to integrate, scale, and govern data intelligently will be better positioned to anticipate markets, optimize decisions, and build sustainable competitive advantages.

At Scraping Pros, we design enterprise scraping solutions that not only extract data but also integrate natively into the business architecture, transforming web information into actionable intelligence on a global scale.

Frequently Asked Questions (FAQs)

What is an enterprise web scraping tool?

An enterprise web scraping tool is a scalable, integrable platform designed to extract, process, and deliver web data as part of an organization’s core data infrastructure, not as an isolated utility.

How is enterprise web scraping different from basic scraping tools?

Basic tools focus on extracting HTML from individual sites, while enterprise web scraping integrates distributed crawling, orchestration, governance, and direct connections to BI, AI, and operational systems.

What does enterprise integration mean in web scraping?

Enterprise integration means that scraped data flows automatically into corporate pipelines, data warehouses, analytics platforms, and decision systems, with full observability, security, and compliance controls.

When should a company move from basic scraping to an enterprise scraping platform?

Organizations should migrate when scraping becomes business-critical: high data volumes, frequent updates, multiple sources, anti-bot challenges, or the need for real-time analytics and automation.

What are the key components of an enterprise scraping architecture?

Core components include source management, distributed extraction, ingestion pipelines, data transformation, system integration, and layers for observability, security, and governance.

Can enterprise web scraping integrate with APIs and internal systems?

Yes. Modern enterprise scraping platforms combine API-first strategies with scraping and integrate directly with data lakes, warehouses, BI tools, pricing engines, and AI models.

Is enterprise web scraping compliant with data protection regulations?

When properly designed, enterprise scraping platforms include compliance frameworks that support regulations such as GDPR, LGPD, and CCPA through auditing, access controls, and data governance policies.

How do companies evaluate a web scraping tool for enterprise use?

Evaluation should focus on integration flexibility, scalability, automation, support for dynamic content and APIs, observability, and the ability to align scraping outputs with business objectives.

Why is observability important in enterprise scraping platforms?

Observability enables teams to monitor performance, detect failures, audit data flows, and ensure data quality at scale, transforming scraping into a reliable production system.

How does enterprise web scraping create competitive advantage?

By delivering timely, accurate, and integrated external data, enterprise scraping enables faster decisions, improved pricing strategies, better market intelligence, and scalable analytics capabilities.