Is your data team spending more time fixing broken scrapers than analyzing data? You’re not alone. Companies operating at petabyte scale face the same challenge: traditional web scraper systems that break at the slightest website change.

The solution isn’t more servers. It’s adaptive intelligence.

In a digital ecosystem that is growing exponentially, traditional web scraping is no longer sufficient. Global companies, from retailers to fintechs, need to extract, process, and analyze information at petabyte scale while maintaining accuracy, adaptability, and ROI.

At Scraping Pros, we understand that scraping performance is no longer measured solely by speed or volume, but by adaptive intelligence, model efficiency, and predictive data extraction optimization.

1. The Challenge: Why Traditional Web Scraper Systems Fail at Petabyte Scale

Until recently, data teams operated under simple logic: more servers, more scraping. But that equation collapses when facing millions of dynamic pages, asynchronous JavaScript, and increasingly sophisticated anti-bot defenses.

Today, the key question is: How do you maintain web scraper accuracy and consistency when the environment changes hourly?

This is where the concept of intelligent scraping performance emerges: automation combined with machine learning models that anticipate errors, retrain selectors, and optimize crawling paths without human intervention.

“The performance of a modern scraper depends not only on the code, but on its ability to learn from web behavior and optimize data extraction.”- Scraping Pros R&D Team

2. The Solution: Best Web Scraper Performance Optimization with ML

What Petabyte-Scale Data Extraction Optimization Really Means

Working at petabyte scale means operating at a level where traditional scraping strategies are no longer viable. It’s not just about collecting information—it’s about processing, cleaning, and classifying billions of records from multiple sources in real time to achieve greater scraper efficiency.

Scraping Pros has developed distributed extraction pipelines that combine machine learning web scraping, intelligent deduplication, and parallel orchestration systems, capable of handling datasets between 1 and 3 PB per month in global e-commerce, price intelligence, and trend monitoring projects.

Real-World Impact: Two Petabyte-Scale Case Studies

International Retailer Case

One project for an international retailer required continuous collection of prices, availability, and reviews for more than 120 million products distributed across 45 countries. The system had to automatically adapt to HTML changes, local languages, and different time zones.

Using ML-based semantic pattern detection models and efficient scraping algorithms, scrapers identified repetitive structures and optimized parsing, reducing computational costs per page by 37% and accelerating the delivery of useful data in near real time for better scraper efficiency.
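A simple heuristic for spotting repetitive structures (such as product cards in a listing) is to count how often each root-to-node tag path occurs: paths that repeat many times usually mark list items worth a dedicated parser. The sketch below uses only Python's standard `html.parser`. It is an illustrative stand-in, not Scraping Pros' model, and the names `TagPathCounter`, `repeated_paths`, and `min_count` are hypothetical.

```python
from collections import Counter
from html.parser import HTMLParser

# Void elements never get a closing tag, so they are not pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class TagPathCounter(HTMLParser):
    """Counts how often each tag path (e.g. 'ul/li.item/span') occurs."""

    def __init__(self):
        super().__init__()
        self.stack = []
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        # Use the first class name so 'div.card' is distinct from 'div.footer'.
        classes = (dict(attrs).get("class") or "").split()
        label = f"{tag}.{classes[0]}" if classes else tag
        self.counts["/".join(self.stack + [label])] += 1
        if tag not in VOID_TAGS:
            self.stack.append(label)

    def handle_endtag(self, tag):
        # Pop back to the nearest matching open tag; tolerates sloppy HTML.
        for i in range(len(self.stack) - 1, -1, -1):
            if self.stack[i].split(".")[0] == tag:
                del self.stack[i:]
                break


def repeated_paths(html, min_count=3):
    """Tag paths repeated at least min_count times, most frequent first."""
    parser = TagPathCounter()
    parser.feed(html)
    return [path for path, n in parser.counts.most_common() if n >= min_count]
```

A production system would add learned features (text shape, position, visual layout) on top of raw structural counts like these.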

Financial Intelligence Case Study

Another petabyte-scale case occurred in the financial intelligence sector, where heterogeneous sources (reports, open APIs, scraping of press releases, and regulatory databases) were integrated. The challenge wasn’t just extraction but data consolidation and versioning. Scraping Pros’ ML pipelines unified more than 900 TB of historical information and 2 PB of new data in a 60-day period, with an error rate of less than 0.02%.
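Consolidation and versioning can be illustrated with two small steps: map each source's field names onto one shared schema, then store a new version of an entity only when its normalized content hash changes. This is a minimal sketch under stated assumptions; the source names in `FIELD_MAPS`, the `VersionedStore` class, and the hashing scheme are hypothetical, not the pipeline used in this project.

```python
import hashlib
import json
from dataclasses import dataclass, field

# Hypothetical per-source field mappings onto one common schema.
FIELD_MAPS = {
    "press_release": {"company": "issuer", "published": "date"},
    "regulatory_db": {"entity_name": "issuer", "filing_date": "date"},
}


def normalize(source, raw):
    """Rename source-specific fields to the shared schema."""
    mapping = FIELD_MAPS[source]
    return {mapping.get(k, k): v for k, v in raw.items()}


def content_hash(record):
    """Stable SHA-256 of a record; keys are sorted so field order is irrelevant."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class VersionedStore:
    # entity_id -> list of (version_number, hash, record), oldest first
    history: dict = field(default_factory=dict)

    def upsert(self, entity_id, record):
        """Append a version only if content changed; return the current version."""
        versions = self.history.setdefault(entity_id, [])
        h = content_hash(record)
        if versions and versions[-1][1] == h:
            return versions[-1][0]  # identical content: no new version
        versions.append((len(versions) + 1, h, dict(record)))
        return len(versions)

    def latest(self, entity_id):
        return self.history[entity_id][-1][2]
```

Because records are normalized before hashing, the same fact arriving from two different sources does not create a spurious new version.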

3. Machine Learning at the Core of Web Scraping Performance

At Scraping Pros, we incorporate machine learning web scraping models into critical stages of the process: data selection, change detection, URL prioritization, and blocking mitigation.

a. Smart Selectors: AI-Powered Data Extraction Optimization

While traditional systems rely on fixed rules or static XPaths, our approach uses AI selectors based on models such as GPT-4 and Claude. These interpret the semantic context of HTML, automatically adjust selectors, and reduce extraction errors by up to 40%.
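The "self-healing" idea behind AI selectors can be sketched as a fallback loop: try the current selector, validate the value, and if it fails, ask a model for a replacement selector that is kept only if it produces a valid value. In this sketch a selector is a regex with one capture group (a real system would use CSS or XPath), and `suggest_selector` is a hypothetical callback standing in for an LLM call; no GPT-4 or Claude API is shown.

```python
import re
from typing import Callable, Optional

# Hypothetical stand-in for an LLM call: (field_name, html) -> new selector or None.
Suggester = Callable[[str, str], Optional[str]]


class SelfHealingField:
    def __init__(self, name: str, selector: str,
                 validate: Callable[[str], bool], suggest_selector: Suggester):
        self.name = name
        self.selector = selector
        self.validate = validate
        self.suggest_selector = suggest_selector

    def _apply(self, selector: str, html: str) -> Optional[str]:
        m = re.search(selector, html, re.S)
        # Require a capture group and a value that passes validation.
        if m and m.re.groups and self.validate(m.group(1)):
            return m.group(1)
        return None

    def extract(self, html: str) -> Optional[str]:
        value = self._apply(self.selector, html)
        if value is not None:
            return value
        # The selector broke (e.g. after a layout change): ask for a replacement.
        candidate = self.suggest_selector(self.name, html)
        if candidate:
            value = self._apply(candidate, html)
            if value is not None:
                self.selector = candidate  # "heal": reuse it on later pages
        return value
```

Validating every suggested selector before adopting it is what keeps a model's occasional wrong guess from silently corrupting the dataset.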

b. Predictive Scraping for Automated Data Collection

Our predictive scraping models analyze historical blocking and scraping performance patterns to anticipate problems in automated data collection. For example, if a site changes its layout every two weeks, the system proactively adapts. This reduces maintenance times and avoids critical interruptions in the data chain.
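For the "layout changes every two weeks" example, the simplest form of prediction is to estimate the typical interval between observed layout changes and schedule a re-validation shortly before the next expected change. This is a deliberately simple statistical sketch, not the ML models described above; `predict_next_change` and `safety_fraction` are hypothetical names.

```python
from datetime import datetime, timedelta
from statistics import median


def predict_next_change(change_times, safety_fraction=0.2):
    """Estimate a site's next layout change and when to re-validate selectors.

    change_times: past layout-change timestamps (at least two).
    Returns (expected_change, recheck_at); recheck_at falls safety_fraction
    of a typical interval before the expected change.
    """
    if len(change_times) < 2:
        raise ValueError("need at least two observed changes")
    times = sorted(change_times)
    intervals = [b - a for a, b in zip(times, times[1:])]
    # The median resists one-off redesigns that would skew a mean.
    typical = median(intervals)
    expected = times[-1] + typical
    return expected, expected - typical * safety_fraction
```

For a site observed changing on January 1, 15, and 29, this predicts the next change around February 12 and schedules a check about 2.8 days earlier.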

c. Resource Optimization with Adaptive Scraping Algorithms

Thanks to adaptive throttling algorithms, our web scraper adjusts its request frequency to server load, reducing the risk of detection and cutting network costs by up to 22%.
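One well-known way to implement adaptive throttling is additive-increase/multiplicative-decrease (AIMD), borrowed from TCP congestion control: raise the request rate slowly while the server looks healthy and halve it at the first sign of strain. We don't know the exact algorithm behind the figures above, so treat this as a sketch; the thresholds and the `AdaptiveThrottle` class are assumptions.

```python
class AdaptiveThrottle:
    """AIMD rate control: speed up slowly, back off sharply.

    HTTP 429/503 or latency above target counts as server strain and halves
    the rate; healthy responses add a small constant increment.
    """

    def __init__(self, rate=1.0, min_rate=0.1, max_rate=20.0,
                 increase=0.5, target_latency=1.0):
        self.rate = rate  # requests per second
        self.min_rate = min_rate
        self.max_rate = max_rate
        self.increase = increase
        self.target_latency = target_latency

    def record(self, status, latency_s):
        """Update the rate from one response and return the new rate."""
        strained = status in (429, 503) or latency_s > self.target_latency
        if strained:
            self.rate = max(self.min_rate, self.rate / 2)
        else:
            self.rate = min(self.max_rate, self.rate + self.increase)
        return self.rate

    def delay(self):
        """Seconds to wait before the next request at the current rate."""
        return 1.0 / self.rate
```

The asymmetry is the point: backing off fast protects the target site (and the scraper's reputation), while slow recovery avoids oscillating straight back into rate limits.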

The result: More stable, resilient, and economically sustainable pipelines with superior scraper efficiency.

4. Adaptive Infrastructure: Scraping as a Living System

Unlike monolithic architectures, Scraping Pros builds modular and self-adjusting infrastructures. Each project behaves like a living system, learning from traffic, content type, and business context.

This allows the platform to auto-scale when detecting spikes in demand or when greater JavaScript rendering capacity is required for optimal scraping performance.
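A common way to turn "spikes in demand" into a scaling decision is to size each worker pool from its backlog: how many workers are needed to drain the queue within a target window, clamped to a minimum and maximum. Kubernetes' Horizontal Pod Autoscaler uses a similar ratio-and-ceiling rule. The function and its parameters below are hypothetical; JavaScript rendering gets its own pool because rendered pages are far slower per worker.

```python
import math


def desired_workers(queue_depth, pages_per_worker_min, drain_minutes,
                    min_workers=1, max_workers=500):
    """Workers needed to drain the current backlog within drain_minutes."""
    capacity_per_worker = pages_per_worker_min * drain_minutes
    needed = math.ceil(queue_depth / capacity_per_worker)
    return max(min_workers, min(max_workers, needed))


# Same backlog, different per-worker throughput (illustrative numbers only).
html_pool = desired_workers(120_000, pages_per_worker_min=60, drain_minutes=10)
render_pool = desired_workers(120_000, pages_per_worker_min=20, drain_minutes=10)
```

With these illustrative numbers, the plain-HTML pool needs 200 workers, while the rendering pool hits the 500-worker cap.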

a. The 4-Layer Intelligent Pipeline for Data Extraction Optimization

- Extraction Layer: Distributed agents with AI Selectors and dynamic rendering

- Validation Layer: Real-time anomaly detection and self-retraining

- Storage Layer: Embedding-based deduplication and ML compression

- Delivery Layer: Normalization and REST/GraphQL API with version control
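The four layers can be sketched as four plain functions chained together, each one replaceable by a distributed service. This is a simplified illustration under loud assumptions: extraction uses fixed regexes, validation is a z-score check against the product's price history, "embedding" deduplication uses bag-of-words vectors as a cheap stand-in for real embeddings, and ML compression is omitted. All function names are hypothetical.

```python
import json
import math
import re
from collections import Counter
from statistics import mean, pstdev


def extract(page):
    """Extraction layer (stand-in): pull a title and price from raw HTML."""
    title = re.search(r"<h1>(.*?)</h1>", page)
    price = re.search(r'data-price="([\d.]+)"', page)
    return {"title": title.group(1) if title else None,
            "price": float(price.group(1)) if price else None}


def is_anomalous(price, history, z_max=4.0):
    """Validation layer: flag prices far outside the product's history."""
    if len(history) < 3:
        return False  # too little history to judge
    sd = pstdev(history)
    return sd > 0 and abs(price - mean(history)) / sd > z_max


def vectorize(text):
    """Storage layer: word counts as a stand-in for a learned embedding."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def is_duplicate(record, stored, threshold=0.9):
    vec = vectorize(record["title"])
    return any(cosine(vec, vectorize(r["title"])) >= threshold for r in stored)


def deliver(record, schema_version="v1"):
    """Delivery layer: normalized JSON tagged with a schema version."""
    return json.dumps({"schema": schema_version, **record}, sort_keys=True)


def run(pages, price_history):
    stored, out = [], []
    for page in pages:
        rec = extract(page)
        if rec["title"] is None or rec["price"] is None:
            continue  # a real system would route this to selector repair
        if is_anomalous(rec["price"], price_history.get(rec["title"], [])):
            continue  # quarantined for review rather than delivered
        if is_duplicate(rec, stored):
            continue
        stored.append(rec)
        out.append(deliver(rec))
    return out
```

Keeping each layer a separate function makes it possible to scale, retrain, or replace one layer without touching the others.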

b. Intelligent Anti-Bot Technology for Web Scraper Efficiency

While other providers rely on rotating proxies or manual solutions, our ML agents analyze behavioral signals (headers, timings, scroll patterns) in real time and automatically adapt their fingerprint.

This technology achieves a 94% anti-bot bypass rate, one of the highest in the industry for web scraper performance.

c. Accelerated JavaScript Rendering

With renderers distributed across specialized containers, we achieve an average rendering time of 2.3 seconds per page, compared with 3.8–4.1 seconds for our main competitors.

This directly translates into more pages per hour and faster time-to-insight for customers seeking the best web scraper performance optimization.
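The arithmetic behind "more pages per hour" is straightforward: a renderer processing pages back to back completes 3,600 / t pages per hour, where t is the average rendering time in seconds. The helper below is a hypothetical illustration that assumes no idle time between pages.

```python
def pages_per_hour(render_seconds, concurrent_renderers=1):
    """Throughput if each renderer processes pages back to back, with no idle time."""
    return 3600 * concurrent_renderers / render_seconds


for label, secs in [("2.3 s/page", 2.3), ("3.8 s/page", 3.8), ("4.1 s/page", 4.1)]:
    print(f"{label}: {pages_per_hour(secs):.0f} pages/hour per renderer")
```

That works out to about 1,565 pages per hour per renderer at 2.3 seconds, versus about 947 at 3.8 seconds and 878 at 4.1 seconds: roughly 65% to 78% more pages from the same hardware.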

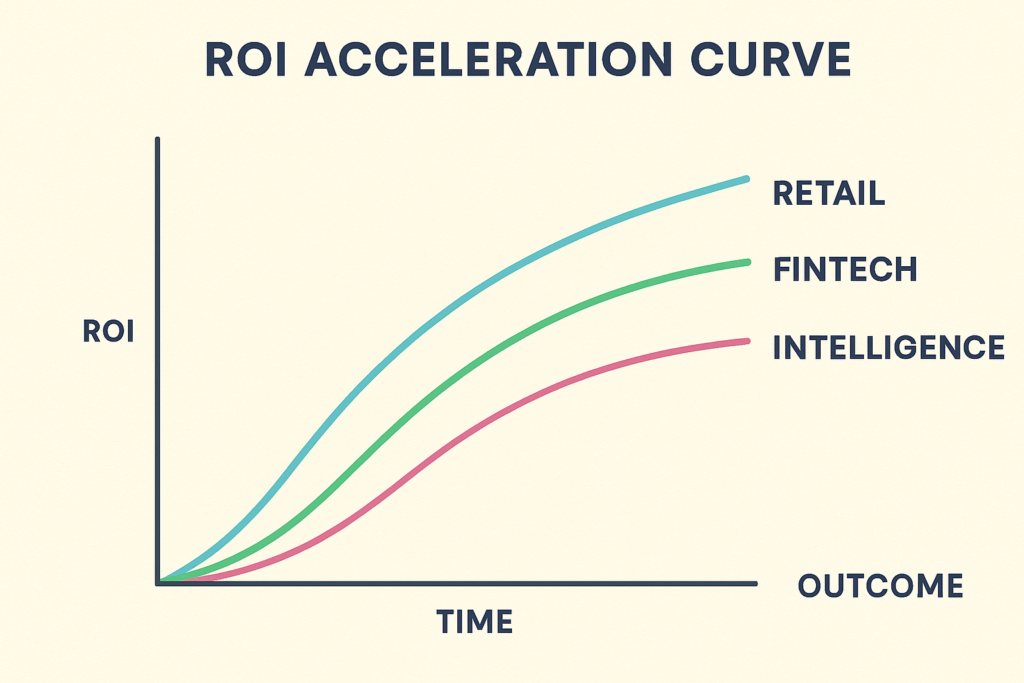

5. From Technical Performance to Business Value: The Time-to-Value Framework

Technical scraping performance is meaningless if it doesn’t translate into economic impact. That’s why at Scraping Pros, we design each data extraction optimization project based on a measurable Time-to-Value model.

The 4-Phase Framework for Data Extraction Optimization

| Phase | Duration | Key Result | Success Metric |
|---|---|---|---|
| Discovery | 3–5 days | KPIs and quick-wins identified | >3 quick-wins identified |
| MVP | 10–15 days | First actionable dataset | First data-driven decision made |
| Monetization | 20–30 days | Data-driven decisions | Visible ROI breakeven path |
| Scaling | 45–60 days | Positive ROI documented | >120% ROI confirmed |

Case Study: Retail Pricing Intelligence with ML Web Scraping (LATAM)

Client: Top 5 retailer in Mexico with 2,400 physical stores + e-commerce

Challenge: Monitor prices of 12M SKUs daily across 23 competitors for dynamic pricing

Discovery Phase (4 days)

- Identified that 89% of pricing changes occurred in two windows: 8–11 a.m. and 7–9 p.m.

- 67% of SKUs had stable prices for >7 days (did not require daily monitoring)

- Quick-win: Focus on 33% of volatile SKUs = 67% reduction in scope
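The Discovery findings translate into two simple scheduling rules: skip daily checks for SKUs whose price has not changed in more than 7 days, and concentrate scraping in the peak repricing windows. The sketch below assumes the last change date per SKU is already known; the function names, the half-open hour windows, and the 7-day cutoff as a parameter are our assumptions.

```python
from datetime import date


def split_by_volatility(last_change: dict[str, date], today: date,
                        stable_days: int = 7):
    """Split SKUs into (volatile, stable) by days since their last price change.

    SKUs unchanged for more than stable_days are treated as stable and can be
    checked less often than daily.
    """
    volatile, stable = [], []
    for sku, changed_on in last_change.items():
        bucket = stable if (today - changed_on).days > stable_days else volatile
        bucket.append(sku)
    return volatile, stable


def in_peak_window(hour, windows=((8, 11), (19, 21))):
    """True if an hour (0-23) falls in a peak window; each end hour is exclusive."""
    return any(start <= hour < end for start, end in windows)
```

Applied to the retailer's catalog, the stable bucket is the 67% of SKUs removed from daily monitoring.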

MVP Phase (12 days)

- Scraped 4M SKUs/day (critical subset) from 8 main competitors using automated data collection

- 94% accuracy in price extraction

- Detection of 12,400 pricing changes/day

- First automated pricing reaction: day 13

Monetization Phase (28 days)

- Full system operational: 12M SKUs, 23 competitors

- Integration with pricing engine via API

- Measured result: 28% reduction in “non-competitive price” incidents

- Revenue impact: Additional $2.4M in Q4 2024 attributed to better pricing

Scaling Phase (55 days)

- Added predictive pricing: ML model anticipates competitor moves

- Expanded to 2 additional countries (Colombia, Chile)

- Calculated ROI: $4.2M in value generated in 6 months on a $180,000 investment = ($4.2M - $180,000) / $180,000 ≈ 2,233% ROI
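ROI is conventionally defined as net gain divided by cost. Applied to the figures above ($180,000 invested, $4.2M in value generated), that gives about 2,233%. Dividing value by investment without subtracting the cost gives about 2,333%, which is a value multiple rather than ROI. The two helpers below make the difference explicit; their names are ours.

```python
def roi_percent(investment, value_generated):
    """Standard ROI: (value - cost) / cost, as a percentage."""
    return (value_generated - investment) / investment * 100


def value_multiple_percent(investment, value_generated):
    """Value as a percentage of cost; does NOT subtract the cost."""
    return value_generated / investment * 100


roi = roi_percent(180_000, 4_200_000)                 # about 2,233%
multiple = value_multiple_percent(180_000, 4_200_000)  # about 2,333%
```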

The Technical Detail That Made the Difference

We implemented a pricing change detection system that not only scraped prices but also calculated the actual discount percentage (comparing it to the list price, identifying fake promos “previously $X, now $Y” where X was never the actual price). This insight generated an additional $840,000 versus simply matching competitor prices.
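Detecting a fake "previously $X, now $Y" promotion comes down to checking whether $X was actually charged in the recent price history, and computing the real discount against a price that was observed. The sketch below assumes a 30-day lookback and uses the highest observed price in that window as the reference; both choices, and the `check_promo` name, are assumptions rather than the exact rules used in this project.

```python
from datetime import date, timedelta


def check_promo(claimed_before, now_price, history, today, lookback_days=30):
    """Validate a 'previously $X, now $Y' claim against observed prices.

    history: list of (date, price) observations for one SKU.
    Returns (real_discount_pct, claim_supported).
    """
    window_start = today - timedelta(days=lookback_days)
    recent = [p for d, p in history if window_start <= d < today]
    # The claim holds only if X was actually charged during the window.
    claim_supported = any(abs(p - claimed_before) < 0.01 for p in recent)
    # Reference = highest price actually observed, not the advertised one.
    reference = max(recent) if recent else claimed_before
    real_discount = (reference - now_price) / reference * 100 if reference else 0.0
    return real_discount, claim_supported
```

For a product advertised as "was $150, now $90" that actually sold at $100 all month, the advertised 40% discount is really 10%, and the "$150" claim is flagged as unsupported.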

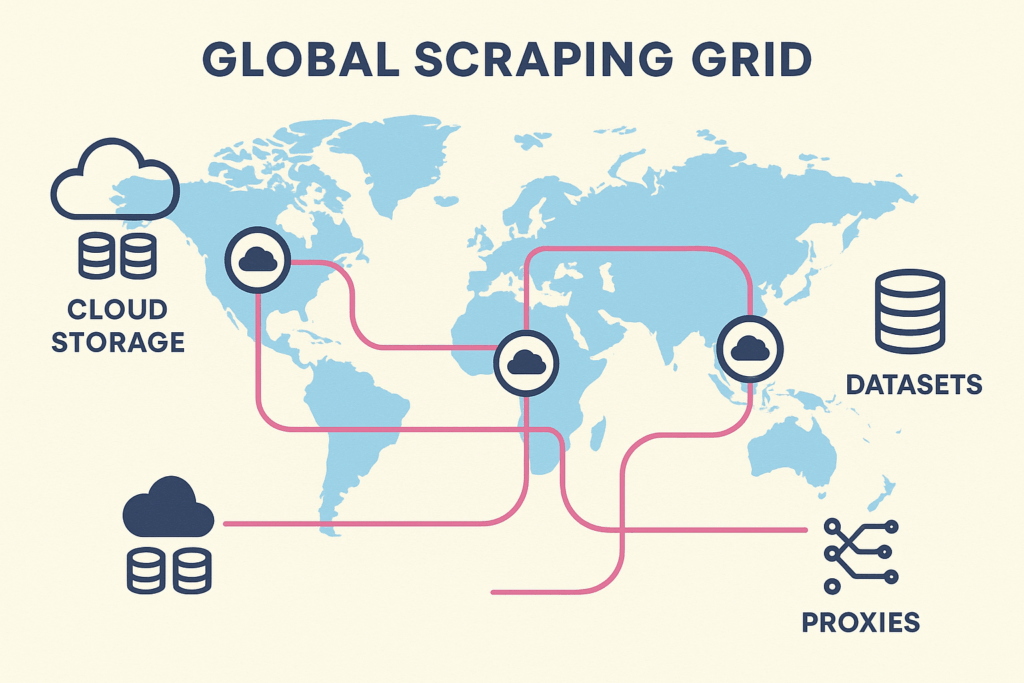

6. Global Scraping Performance: Intelligent Geodistribution

In an international scraping environment, latency and geographic restrictions can be the most critical scraping performance factors.

Scraping Pros employs distributed nodes operating from multiple regions (the Americas, Europe, and Asia) with balancing algorithms that dynamically allocate resources based on load and geolocation.

This model reduces latency by an average of 35% compared to centralized architectures and enables compliance with local data protection regulations.

Regional Intelligence for Better Scraper Efficiency

The ML models also incorporate geofeatures, learning which regions offer greater stability or shorter response times for optimal web scraper performance.
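A lightweight way to "learn which regions offer greater stability or shorter response times" is to keep an exponentially weighted moving average (EWMA) of latency and success rate per region, then route work to the best-scoring region, optionally restricted to regions allowed by data-residency rules. This is a simple statistical stand-in for the ML models described above; the region names, the scoring formula, and the class names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RegionStats:
    latency_s: float = 1.0  # EWMA of response time in seconds
    success: float = 1.0    # EWMA of success (1 = success, 0 = failure)


class RegionSelector:
    """Learns per-region latency and stability with exponentially weighted averages."""

    def __init__(self, regions, alpha=0.2):
        self.alpha = alpha  # weight of the newest observation
        self.stats = {r: RegionStats() for r in regions}

    def observe(self, region, latency_s, ok):
        s, a = self.stats[region], self.alpha
        s.latency_s = (1 - a) * s.latency_s + a * latency_s
        s.success = (1 - a) * s.success + a * (1.0 if ok else 0.0)

    def score(self, region):
        s = self.stats[region]
        # Higher is better: reward stability, penalize slow responses.
        return s.success / s.latency_s

    def best(self, allowed=None):
        """Best region, optionally limited to an allowed subset."""
        candidates = allowed or list(self.stats)
        return max(candidates, key=self.score)
```

EWMAs keep memory constant per region and let recent conditions dominate, so a region that starts failing is deprioritized within a few requests.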

In projects for LATAM—for example, monitoring regional marketplaces and catalogs—the platform adapted its models to local dialects, detecting linguistic variations (“shirt” vs. “t-shirt”) and improving the quality of the final dataset.

This linguistic customization, often invisible, makes the difference between simple extraction and truly contextual data intelligence.
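At its simplest, handling regional vocabulary means mapping the different local terms for the same product onto one canonical category before records are merged. The sketch below uses a small hand-written synonym table for regional Spanish words for a t-shirt; the table is illustrative only, and a production system would learn such mappings rather than hard-code them.

```python
import re
import unicodedata

# Illustrative synonym table: regional Spanish terms for the same garment.
REGIONAL_TERMS = {
    "playera": "t-shirt",
    "remera": "t-shirt",
    "polera": "t-shirt",
    "camiseta": "t-shirt",
}


def strip_accents(text):
    """Remove diacritics so 'algodón' and 'algodon' compare equal."""
    return "".join(c for c in unicodedata.normalize("NFKD", text)
                   if not unicodedata.combining(c))


def canonical_category(title):
    """Return the canonical category for a product title, or None if unknown."""
    for word in re.findall(r"\w+", strip_accents(title.lower())):
        if word in REGIONAL_TERMS:
            return REGIONAL_TERMS[word]
    return None
```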

7. Real-World Use Cases: From Theory to Impact

The value of ML-powered intelligent scraping is measured in concrete results:

- Retail (LATAM): Daily monitoring of 12 million SKUs with automatic change detection, and a 28% reduction in non-competitive pricing incidents

- Fintech (Europe): Automated extraction of rates and credit terms in real time, improving reporting agility by 40%

- Market Intelligence (US): Predictive tracking of e-commerce trends, with models that anticipate product launches

These cases show that scraping powered by ML is not just extraction, but applied intelligence for superior data extraction optimization.

The Future of Web Scraping: Interoperability and Responsible AI

Next-generation web scraper systems will integrate three vectors:

A) Continuous Learning: Models that retrain themselves and adapt their logic to environmental changes for improved scraping performance

B) Semantic Interoperability: Integration of web data with metaverses, private APIs, and IoT ecosystems

C) Ethical Scraping: Compliance with terms of service and transparency in automated data collection

At Scraping Pros, we work to ensure that AI not only improves scraping performance but also data trust and traceability.

“The future of scraping isn’t about more speed. It’s about more intelligence, context, and ethics in every byte.”- Scraping Pros Global Team

Conclusion: Web Scraper Performance Redefined

Web scraping performance is no longer defined by how many pages are downloaded, but by how quickly and accurately data is converted into business value.

Scraping Pros is leading this change with a unique combination of machine learning web scraping, intelligent automation, and strategic vision for best web scraper performance optimization.

Every pipeline holds a promise: cleaner data, faster decisions, and more efficient operations through superior scraper efficiency.

Ready to Optimize Your Data Extraction?

Extracting more than 100M pages per month? Schedule a free technical audit of your web scraper infrastructure and discover how ML-powered data extraction optimization can reduce costs and accelerate your time-to-insight.

Web Scraper Performance & ML Optimization: FAQ

1. What is web scraping performance optimization?

It is the process of improving the speed, accuracy, and stability of scrapers using machine learning techniques, network optimization, and automatic adaptability to site changes for better scraper efficiency.

2. How does machine learning help improve scraping?

ML models detect patterns, predict failures and blocks, and automatically adjust selectors, reducing errors and manual maintenance in automated data collection.

3. What sets Scraping Pros apart from its competitors?

Scraping Pros combines state-of-the-art AI, modular infrastructure, and transparent ROI metrics, delivering faster and more stable results with superior web scraper performance at a lower cost.

4. How long does it take for a project to show value?

On average, less than 15 days. Our Time-to-Value methodology prioritizes incremental results and actionable datasets from the MVP phase for rapid data extraction optimization.

5. Does Scraping Pros operate globally?

Yes. We manage projects in Latin America, Europe, and North America, with support across multiple time zones and experience in diverse regulatory contexts for ML web scraping.

6. Which industries benefit most from intelligent scraping?

Retail, fintech, market intelligence, academic research, and data companies that need to monitor dynamic or sensitive information with automated data collection.

7. How does Scraping Pros ensure ethical and legal data extraction?

We implement compliance protocols (robots.txt, frequency limits, data anonymization) and internal transparency audits for responsible web scraper operations.
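Two of these protocols, honoring robots.txt and limiting request frequency, can be enforced with Python's standard `urllib.robotparser`. The sketch below parses a robots.txt body offline; the file contents, the user-agent name, and the `polite_delay` floor are hypothetical examples, and a live crawler would fetch the real file with `set_url()` and `read()`.

```python
from urllib import robotparser

# Hypothetical robots.txt body for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""


def build_policy(robots_lines):
    """Parse robots.txt lines into a RobotFileParser."""
    rp = robotparser.RobotFileParser()
    rp.parse(robots_lines)
    return rp


def polite_delay(rp, user_agent, default_delay=1.0):
    """Honor the site's Crawl-delay if declared; never go below our own floor."""
    declared = rp.crawl_delay(user_agent)
    return max(default_delay, declared or 0)


policy = build_policy(ROBOTS_TXT.splitlines())
```

Every URL is checked with `policy.can_fetch(user_agent, url)` before it is queued, and the delay returned by `polite_delay` caps the per-site request rate.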