Discover the 13 best data pipeline tools for your business and explore web scraping services as an alternative to make your data valuable for business intelligence.

In the fast-paced world of data analytics, the ability to move data efficiently and reliably is more important than ever. As we move into 2025, organisations are looking for solutions that not only handle large volumes of data but also offer flexibility, scalability and ease of use. This article explores the data pipeline tools that are leading the way in this ever-evolving landscape.

Whether for business intelligence, machine learning or real-time analytics, a robust data pipeline is essential for turning raw data into valuable insights.

Data ingestion is the first step in processing data and extracting value from the vast amounts of data that organisations collect today. It is typically defined as the process of collecting and importing raw data from various sources into a centralised storage system (such as a data warehouse, data lake or database) for analysis and use. It is an essential component of decision-making and insight.

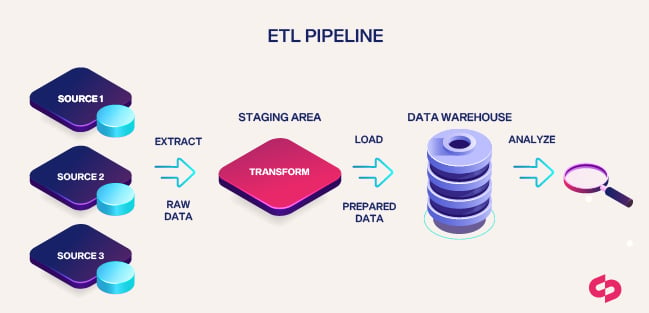

The process typically includes extraction, transformation and loading (ETL); transformation is optional in basic ingestion but central to ETL/ELT. There are two main ingestion modes: batch, which runs at scheduled intervals, and real-time (streaming), which handles a continuous flow of data.
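The three steps and the two ingestion modes can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not how any of the tools below are implemented: the `orders` table, the sample CSV and the function names are all assumptions made for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; the third row has no amount and is dropped.
RAW_CSV = """order_id,amount,currency
1,19.99,usd
2,5.00,eur
3,,usd
"""

def extract(text):
    """Extract: read raw rows from a CSV source as dictionaries."""
    return csv.DictReader(io.StringIO(text))

def transform(rows):
    """Transform: skip rows without an amount, fix types and casing."""
    for row in rows:
        if not row["amount"]:
            continue
        yield int(row["order_id"]), float(row["amount"]), row["currency"].upper()

def load(conn, records):
    """Load: insert records into the target table, return the count written."""
    batch = list(records)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", batch)
    conn.commit()
    return len(batch)

def run_batch(conn, text):
    """Batch mode: process the whole extract in one scheduled run."""
    return load(conn, transform(extract(text)))

def run_streaming(conn, events):
    """Streaming mode: process each event as soon as it arrives."""
    written = 0
    for event in events:
        written += load(conn, transform([event]))
    return written

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(run_batch(conn, RAW_CSV))
    live = [{"order_id": "4", "amount": "12.00", "currency": "gbp"}]
    print(run_streaming(conn, live))
    print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Both modes reuse the same `transform` and `load` steps; the only difference is whether records arrive as one scheduled batch or one at a time.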

Ingestion tools must be able to handle a variety of sources, including structured data (databases, spreadsheets), semi-structured data (JSON, XML), unstructured data (text documents, images, social media), and streaming data (IoT, web applications).
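As a rough illustration of what handling several source types involves, the sketch below normalises structured (CSV) and semi-structured (JSON, XML) input into the same list-of-dictionaries shape. The `ingest` function, the `PARSERS` table and the `record` tag name are hypothetical names chosen for this example; real ingestion tools cover far more formats and edge cases.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

def from_csv(text):
    """Structured input: one dictionary per CSV row."""
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]

def from_json(text):
    """Semi-structured input: accept a single object or a list of objects."""
    data = json.loads(text)
    return data if isinstance(data, list) else [data]

def from_xml(text, record_tag="record"):
    """Semi-structured input: one dictionary per <record> element."""
    root = ET.fromstring(text)
    return [{child.tag: child.text for child in rec} for rec in root.iter(record_tag)]

# Map a format name to the parser that understands it.
PARSERS = {"csv": from_csv, "json": from_json, "xml": from_xml}

def ingest(text, fmt):
    """Dispatch to the right parser and return records in a common shape."""
    try:
        parser = PARSERS[fmt]
    except KeyError:
        raise ValueError(f"unsupported format: {fmt}") from None
    return parser(text)
```

Once every source yields the same record shape, downstream transformation and loading steps do not need to know where the data came from.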

These tools are critical for efficiency, improved data quality, real-time insights, centralised data access, scalability and information security.

Best Data Pipeline Tools

We present the top 13 data pipeline tools with their different features:

- Scraping Pros: Managed service that provides data extraction feeds directly into your workflows and operations.

- Integrate.io: This is an easy-to-use, no-code data pipeline platform with ETL, ELT and reverse ETL capabilities and over 130 connectors. It stands out for its simplicity and automation.

- Airbyte: This is an open source data integration platform that allows you to create ELT pipelines. It offers over 300 out-of-the-box connectors and the ability to build custom connectors.

- Amazon Kinesis: This is an AWS service for real-time processing of large-scale streaming data. It integrates with other AWS services and offers different components for video, data streams, firehose and analytics.

- Matillion: This is a cloud-based data integration and transformation platform designed for cloud data warehouses. It offers a visual interface, hundreds of connectors, and advanced transformation capabilities.

- Apache NiFi: Open source platform for routing, transforming and mediating data between systems. It is schema-free and provides visual control of data flow.

- Snowflake: Snowflake’s native data pipelines integrate directly into its ecosystem for common data integration scenarios.

- Talend: Comprehensive data ingestion and management tool that combines integration, integrity and governance in a low-code platform. Flexible for cloud or on-premises deployment.

- Dropbase: Cloud platform for extracting, transforming and loading data from CSV files and spreadsheets into platform-managed SQL databases.

- AWS Glue: Fully managed ETL service on AWS, with integration with other AWS services and support for batch and streaming processing.

- Google Cloud Dataflow: Google Cloud’s serverless data processing service for highly available batch and streaming processing.

- Microsoft Azure Data Factory: This is a Microsoft ETL and data integration service with a no-code interface and deep integration with the Azure ecosystem.

- StreamSets Data Collector: Now part of IBM, this is a data ingestion platform focused on real-time data pipelines with monitoring capabilities.

Company Selection Criteria

Choosing the right tool is a strategic decision that should be based on a clear understanding of the organisation’s specific needs, its team’s capabilities and its long-term goals for data management and analysis.

When selecting a data pipeline platform that’s right for their needs, organisations should consider several key criteria. These criteria will ensure that the chosen platform meets their long-term technical, business and operational requirements.

Here are some of the key criteria to consider:

- Reliable data movement: The platform’s ability to move data consistently and accurately is critical. The best platforms are designed to avoid data loss, handle failures gracefully and maintain clear data lineage tracking.

- Real scalability: The platform must be able to handle growth not only in data volume, but also in pipeline complexity. This means being able to scale processing power as workloads grow and pipelines become more complex.

- Practical monitoring: It’s critical that the platform provides real-time monitoring, detailed logs and automated alerts to quickly identify and resolve problems.

- Integrated security: Security can’t be an afterthought. Modern platforms must provide end-to-end encryption, granular access controls and comprehensive audit trails to meet compliance requirements.

- Effective cost control: The best platforms help manage costs without sacrificing performance. Look for pay-as-you-go pricing and tools that automatically optimise resource usage.

- Total cost of ownership (TCO): Carefully evaluate the TCO, which includes operating costs, required staff expertise, training needs and infrastructure requirements.

- Integration flexibility: The platform should integrate easily with the organisation’s existing technology stack. This includes robust APIs, pre-built connectors for common sources, and the ability to build custom integrations. It’s important to ensure that the tool supports the necessary data sources and destinations.

- Data transformation and integration capabilities: Evaluate the tool’s data cleansing, transformation and integration capabilities. Look for features that simplify complex data mapping, merging and handling of different data types.

- Ease of use and learning curve: Consider the tool’s user interface, ease of configuration and usability. Intuitive interfaces, visual workflows and drag-and-drop functionality can streamline pipeline development and management. It’s also important that the platform matches the skills of the team.

- Support for real-time or batch processing: Determine whether the tool supports the company’s preferred data processing mode and whether it’s suitable for its pipeline needs (real-time streaming or batch processing).

- Monitoring and alerting capabilities: Verify that the tool offers comprehensive monitoring and alerting capabilities that provide visibility into the status, performance and health of pipelines, including logs, metrics, error handling and notifications for efficient troubleshooting.

- Security and compliance measures: Ensure that the tool provides robust security measures such as encryption and access controls, and that it supports compliance when handling sensitive or regulated data.

- Integration with existing infrastructure: Evaluate how well the data pipeline tool integrates with your current infrastructure, including data storage systems and analytics platforms. Seamless integration can save time and effort in setting up and maintaining the pipeline.

- Level of support and documentation: Evaluate the level of support and availability of documentation from the tool vendor. Look for comprehensive documentation, user forums and responsive support channels to assist with troubleshooting. The vendor’s stability in the market should also be considered.

- Speed of implementation: Consider how quickly you need to be up and running. Some platforms offer faster time-to-value but may sacrifice customisation options. Others require more time to set up but offer greater flexibility.

- Data quality: Check whether the tool offers built-in data quality management capabilities, such as validation and cleansing during ingestion.

- Operational efficiency: Tools that offer automation and orchestration of complex workflows can improve operational efficiency and reduce the risk of human error.
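To make the reliability criterion more concrete, here is one common way to handle failures gracefully: retry transient errors with exponential backoff, and make the load step idempotent so that a replayed batch does not create duplicates. This is a sketch under stated assumptions, not a guarantee of zero data loss and not the mechanism of any specific platform; `with_retries`, `idempotent_load` and the `events` table are hypothetical names.

```python
import sqlite3
import time

def with_retries(operation, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call operation(), retrying on ConnectionError with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts:
                raise  # out of attempts: surface the failure to the caller
            # Wait base_delay, then 2x, then 4x, ... before the next try.
            sleep(base_delay * 2 ** (attempt - 1))

def idempotent_load(conn, records):
    """Write (id, payload) pairs keyed by id, so replays overwrite, not duplicate."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS events (id TEXT PRIMARY KEY, payload TEXT)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO events (id, payload) VALUES (?, ?)", records
    )
    conn.commit()
```

A pipeline step could then be wrapped as `with_retries(lambda: idempotent_load(conn, batch))`. Because the load is keyed by `id`, running the same batch again after a partial failure leaves the table in the same state.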

Ultimately, the “best” platform will be the one that fits the specific needs, budget and skills of the organisation’s team, without being distracted by unnecessary features or market hype.

In general, automated tools offer significant benefits such as efficiency (simplifying the ingestion process and reducing manual effort), improved data quality (by incorporating validation and cleansing processes during ingestion), real-time insights, centralised access, scalability, security and cost reduction.
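The validation and cleansing mentioned above might look something like the following sketch. The rules (a required `id`, an `email` containing `@`, no duplicate ids) and all function names are assumptions chosen for illustration; a real tool would let you configure its own rule set.

```python
from dataclasses import dataclass, field

@dataclass
class ValidationResult:
    valid: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (record, issues) pairs

def clean(record):
    """Cleansing: trim whitespace from strings and lower-case the email."""
    cleaned = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
    if isinstance(cleaned.get("email"), str):
        cleaned["email"] = cleaned["email"].lower()
    return cleaned

def find_issues(record):
    """Validation: return a list of human-readable problems, empty if none."""
    issues = []
    if not record.get("id"):
        issues.append("missing id")
    if "@" not in (record.get("email") or ""):
        issues.append("invalid email")
    return issues

def validate_batch(records):
    """Clean each record, then split the batch into valid and rejected rows."""
    result = ValidationResult()
    seen_ids = set()
    for raw in records:
        record = clean(raw)
        issues = find_issues(record)
        if record.get("id") in seen_ids:
            issues.append("duplicate id")
        if issues:
            result.rejected.append((record, issues))
        else:
            seen_ids.add(record["id"])
            result.valid.append(record)
    return result
```

Keeping rejected rows together with the reasons they failed, rather than silently dropping them, makes it easier to trace quality problems back to the source.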

Several key factors influence the selection of ingestion tools and data pipelines. These include reliability of data movement, scalability, monitoring capabilities, security, total cost of ownership (TCO) and pricing model, integration flexibility, data transformation and integration capabilities, ease of use and learning curve, support for real-time or batch processing, vendor support and documentation, speed of implementation, and regulatory compliance.

As we’ve said, your choice should be based on a clear understanding of your organisation’s specific needs, your team’s skills and your long-term goals for data management and analysis.

Web scraping as an alternative way to improve business intelligence

Web scraping is an advanced technique for extracting information and data from websites. It automates data collection and converts the results into a structured, readable format that is easy to analyse, such as a local file or spreadsheet. It is an efficient alternative to manual copy and paste, especially when dealing with large amounts of data.
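As a small illustration of turning page markup into a spreadsheet-friendly format, the sketch below parses an inline HTML snippet with Python's standard `html.parser` module and writes the result as CSV. The markup, the `product`, `name` and `price` class names and the function names are invented for the example; fetching live pages, handling site changes and respecting each site's terms are outside its scope.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page fragment standing in for a downloaded product listing.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">24.50</span></li>
</ul>
"""

class ProductParser(HTMLParser):
    """Collect one dictionary per <li class="product"> element."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._field = None  # name of the span currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.rows.append({})
        elif tag == "span" and css_class in ("name", "price") and self.rows:
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

def scrape(html):
    """Extract product rows from an HTML string."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.rows

def to_csv(rows):
    """Serialise the rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

if __name__ == "__main__":
    print(to_csv(scrape(SAMPLE_HTML)))
```

The resulting CSV text can be saved to a local file or opened directly in a spreadsheet.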

In many cases, it can be an important alternative to data pipelines and ETL when extracting large volumes of data and converting it into easily analysable and visualisable formats.

The key competitive advantages of web scraping include:

- Competitive intelligence: Tracks competitors’ prices, product offerings, marketing strategies and customer reviews to gain market and competitive insights.

- Price optimisation: Collects pricing data from multiple sources for pricing analysis and dynamic pricing strategies, enabling companies to competitively adjust prices and maximise profits.

- Lead generation: Extracts contact information from websites, directories and social media platforms to create lists of potential customers for targeted marketing and outreach.

- Investment decision making: Collects historical market and financial data to perform trend analysis, identify investment opportunities and assess potential risks.

- Product optimisation: Collects and analyses customer reviews, comments and opinions to gain insight into customer preferences, pain points and expectations, enabling product improvements and new product development.

- Product and category development: Identifies popular products and categories by extracting data from competitor sites, helping companies refine their offerings and maximise sales.

- Product data optimisation: Collects product data from multiple sources to ensure accuracy, completeness and consistency of product listings, improve SEO efforts and enhance the customer experience.

- Marketing strategy: Extracts data from social media, forums and other online platforms to analyse customer sentiment, identify trends and effectively adapt marketing strategies.

Need more information about our web scraping services? At Scraping Pros, we can provide you with the techniques, experience and resources you need to manage your data effectively, reliably and ethically.