Introduction: Hybrid API Scraping as Competitive Advantage

Hybrid API scraping has become a defining data strategy for enterprise organizations in 2026. This approach combines official APIs with targeted web scraping to build resilient, comprehensive data extraction systems that are harder to disrupt and more complete than architectures relying on a single method.

In today’s digital economy, competitive advantage depends not only on data access but also on how data is integrated, governed, and scaled. While APIs offer structured access and web scraping provides presentation-layer intelligence, neither approach alone delivers complete market coverage. Hybrid API scraping bridges this gap by dynamically orchestrating multiple data sources under unified logic.

Leading organizations no longer debate “API vs. web scraping.” Instead, they architect systems where REST and GraphQL APIs serve as primary endpoints, while distributed crawling and selective scraping provide enrichment, validation, and fallback mechanisms. The result is broader coverage, higher reliability, and faster response to market changes.

At Scraping Pros, we design these hybrid architectures daily, working with platforms that expose only partial APIs, keep critical data outside their API contracts, or publish strategic information solely in the presentation layer. Integration doesn’t eliminate scraping; it makes extraction smarter, more secure, and far more scalable.

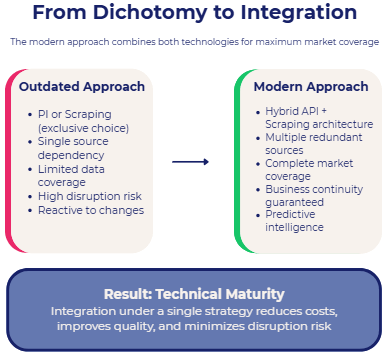

APIs and Web Scraping: From Dichotomy to Architectural Design

The Evolution Beyond “API vs. Web Scraping”

From a business perspective, the “API vs. web scraping” debate is outdated. APIs offer structured data, relative stability, and less legal friction, but they rarely expose the full informational value of a platform. Scraping, on the other hand, allows you to capture informal market signals: pricing changes, inventory turnover, dynamic content, and hidden hierarchies.

Strategic Integration for Technical Maturity

Technical maturity emerges when both approaches are integrated under a single strategy. Hybrid API scraping represents this evolution, where APIs serve as the first point of access, and scraping acts as an enrichment, validation, or fallback mechanism. This combination reduces operating costs, improves data quality, and decreases the risk of disruptions.
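As a minimal sketch of the enrichment and validation roles, the Python snippet below (3.9+) assumes each source has already been normalized into a flat dictionary. The function name `merge_api_and_scraped` and the field names are hypothetical. API values take precedence, scraped values fill fields the API leaves empty, and disagreements are recorded rather than silently overwritten.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class MergeResult:
    record: dict[str, Any]
    # field name -> (api_value, scraped_value) for values that disagree
    discrepancies: dict[str, tuple[Any, Any]] = field(default_factory=dict)


def merge_api_and_scraped(api: dict[str, Any], scraped: dict[str, Any]) -> MergeResult:
    """API values win; scraped values fill gaps and serve as a cross-check."""
    merged = dict(api)
    discrepancies: dict[str, tuple[Any, Any]] = {}
    for key, scraped_value in scraped.items():
        api_value = api.get(key)
        if api_value is None:
            # Enrichment: the API does not expose (or left empty) this field.
            merged[key] = scraped_value
        elif api_value != scraped_value:
            # Validation: keep the API value, but flag the mismatch for review.
            discrepancies[key] = (api_value, scraped_value)
    return MergeResult(merged, discrepancies)


result = merge_api_and_scraped(
    {"sku": "A1", "price": 19.99, "stock": None},
    {"price": 18.49, "stock": 12, "promo_badge": "2x1"},
)
```

Keeping discrepancies separate lets an analyst or an automated rule decide later whether the API or the page is stale, instead of hard-coding that judgment into the merge.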

REST and GraphQL: Real Implications for Data Extraction

Understanding REST APIs in Enterprise Environments

REST remains dominant in legacy and enterprise environments, with well-defined endpoints, explicit versioning, and clear consumption limits. Its predictability makes it ideal for stable, long-term integrations.

GraphQL: Flexibility and Complexity

GraphQL introduces considerable flexibility: it allows dynamic queries, control over payload size, and selective access to fields. From a data extraction perspective, GraphQL demands greater discipline. Its power can become a risk if query volume or query complexity (nesting depth, requested page sizes) is not controlled.
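One way to impose that discipline is to estimate a query's worst-case size before sending it. The sketch below is an illustrative assumption, not any provider's published cost formula: it represents a selection set as a nested Python dict, where `None` marks a scalar field and a hypothetical `_first` key holds the page size requested for a list. Page sizes multiply down the tree, so nesting quickly inflates the number of returned nodes.

```python
from typing import Any

# A selection maps field names to None (scalar) or to a nested selection.
# The hypothetical "_first" key stores the page size requested for a list field.
Selection = dict[str, Any]


def _children(selection: Selection) -> list[Selection]:
    return [sub for name, sub in selection.items() if name != "_first" and sub is not None]


def depth(selection: Selection) -> int:
    """Number of nested selection levels, counting the top level as 1."""
    return 1 + max((depth(sub) for sub in _children(selection)), default=0)


def estimate_nodes(selection: Selection, parents: int = 1) -> int:
    """Worst-case node count: each list multiplies the nodes of its parent."""
    total = 0
    for sub in _children(selection):
        nodes_here = parents * sub.get("_first", 1)
        total += nodes_here + estimate_nodes(sub, nodes_here)
    return total


def check_budget(selection: Selection, max_depth: int = 4, max_nodes: int = 10_000) -> None:
    """Reject queries that exceed the (assumed) depth or node budget."""
    d, n = depth(selection), estimate_nodes(selection)
    if d > max_depth or n > max_nodes:
        raise ValueError(f"query too expensive: depth={d}, nodes={n}")


query = {"products": {"_first": 100, "id": None,
                      "variants": {"_first": 50, "price": None}}}
check_budget(query)  # passes: depth 3, 5,100 nodes
```

Asking for 100 products with 50 variants each yields 100 + 100 × 50 = 5,100 nodes, within a 10,000-node budget; raising variants to 200 per product would push the estimate to 20,100 and the check would reject the query before it reaches the API.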

At Scraping Pros, we have seen integrations where a poor query strategy generated more severe bottlenecks than traditional scraping. That’s why our architectures incorporate abstraction layers that decouple the business logic from the API contract, allowing for rapid adaptation to changes without affecting downstream data flows.
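A minimal sketch of such an abstraction layer, using the adapter pattern with a `typing.Protocol`, is shown below. The payload shapes, class names, and the `PricePoint` record are hypothetical. The point is that when a provider changes its contract, only the matching adapter changes, while `ingest` and everything downstream keep consuming the same canonical type.

```python
from dataclasses import dataclass
from typing import Any, Protocol


@dataclass(frozen=True)
class PricePoint:
    """Canonical record: the only shape downstream pipelines depend on."""
    sku: str
    price: float
    currency: str


class PriceSource(Protocol):
    def to_canonical(self, payload: dict[str, Any]) -> PricePoint: ...


class RestV2Adapter:
    """Hypothetical REST v2 payload: {"id", "pricing": {"amount", "currency"}}."""

    def to_canonical(self, payload: dict[str, Any]) -> PricePoint:
        pricing = payload["pricing"]
        return PricePoint(payload["id"], float(pricing["amount"]), pricing["currency"])


class GraphQLAdapter:
    """Hypothetical GraphQL payload: {"data": {"product": {"sku", "price": {...}}}}."""

    def to_canonical(self, payload: dict[str, Any]) -> PricePoint:
        product = payload["data"]["product"]
        return PricePoint(product["sku"], float(product["price"]["value"]),
                          product["price"]["currencyCode"])


def ingest(source: PriceSource, payload: dict[str, Any]) -> PricePoint:
    # Business logic sees PricePoint only, never the provider's contract.
    return source.to_canonical(payload)
```

Both `ingest(RestV2Adapter(), {...})` and `ingest(GraphQLAdapter(), {...})` return the same `PricePoint`, so switching a source from REST to GraphQL does not ripple into downstream code.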

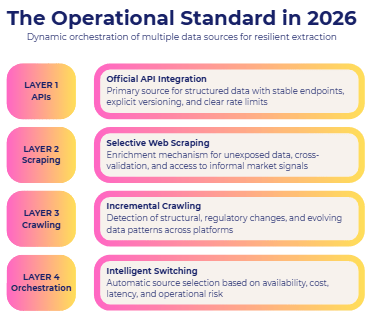

Hybrid API Scraping: The Operational Standard in 2026

What is Hybrid API Scraping?

The dominant model in 2026 is hybrid API scraping, an architecture that combines official APIs, selective scraping, and incremental crawling under a single orchestration logic. This approach allows organizations to dynamically decide how to obtain each piece of data based on availability, cost, latency, and operational risk.

At Scraping Pros, we implement this model as a data resilience strategy, where no single source becomes a single point of failure.

Main Operational Benefits

The main operational benefits of the hybrid approach include:

- Prioritized use of APIs when stable and complete endpoints exist

- Scraping as a mechanism for enrichment, cross-validation, or access to unexposed data

- Incremental crawling to detect structural or regulatory changes

- Automatic switching between sources in case of outages, contract changes, or rate limitations
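The switching logic in the last item can be sketched as an ordered list of fetchers tried in priority order. This is a simplified illustration under assumed names (`SourceUnavailable`, `fetch_with_fallback`, and the two stub fetchers); a production orchestrator would also weigh cost and latency, as described above, and would log which source served each record.

```python
from typing import Any, Callable


class SourceUnavailable(Exception):
    """Raised by a fetcher on an outage, a rate limit, or a broken contract."""


Fetcher = Callable[[str], dict[str, Any]]


def fetch_with_fallback(key: str, sources: list[tuple[str, Fetcher]]) -> tuple[str, dict[str, Any]]:
    """Try sources in priority order (API first, scraper last).

    Returns the name of the source that answered together with its data.
    """
    errors: list[str] = []
    for name, fetch in sources:
        try:
            return name, fetch(key)
        except SourceUnavailable as exc:
            errors.append(f"{name}: {exc}")  # keep the failure for monitoring
    raise SourceUnavailable("all sources failed: " + "; ".join(errors))


# Hypothetical fetchers: the API is rate limited, so the scraper answers.
def api_fetch(sku: str) -> dict[str, Any]:
    raise SourceUnavailable("HTTP 429, quota exhausted")


def scrape_fetch(sku: str) -> dict[str, Any]:
    return {"sku": sku, "price": 18.49}


source, record = fetch_with_fallback("A1", [("api", api_fetch), ("scraper", scrape_fetch)])
```

Returning the source name alongside the data keeps provenance visible, which also supports data lineage.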

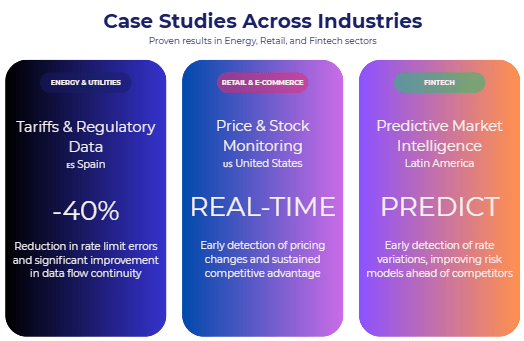

Case Study: Fintech in Latin America

Objective: To anticipate changes in credit conditions and financial offers before their official publication.

Process: Integration of public banking APIs, regulatory crawling of official bodies, and scraping of financial and commercial portals.

Result: Early detection of variations in rates and benefits, improving risk models and reducing credit exposure ahead of competitors.

This type of architecture allows a shift from reactive logic to predictive market intelligence, where data not only describes the present but also anticipates the movement of the ecosystem.

Authentication, Rate Limiting, and Security in API Integrations

The Critical Role of API Security

API integration introduces specific challenges that go beyond technical access. Authentication, consumption control, and security define not only system stability but also its long-term sustainability.

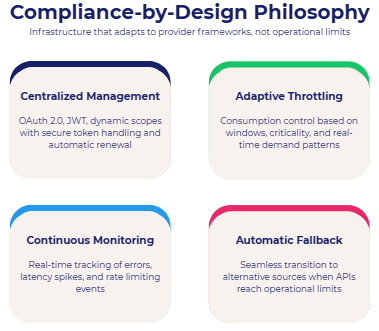

Compliance-by-Design Philosophy

Scraping Pros addresses these challenges with a compliance-by-design philosophy: the infrastructure adapts to the usage framework each data provider expects (authentication scheme, quotas, terms of use) rather than pushing against its operational limits.

Security Strategy Pillars

The pillars of this strategy include:

- Centralized management of credentials and tokens (OAuth 2.0, JWT, dynamic scopes)

- Adaptive throttling based on consumption windows and data criticality

- Continuous monitoring of errors, latency, and rate limiting events

- Automatic fallback to scraping or crawling when an API reaches its limit
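Adaptive throttling and monitoring of rate-limit events can be combined in a token bucket whose refill rate reacts to the provider's signals. The sketch below uses additive-increase/multiplicative-decrease (AIMD), the scheme familiar from TCP congestion control; it is our illustrative choice, not a description of any specific Scraping Pros component. The caller is assumed to invoke `on_rate_limited()` when it receives an HTTP 429 and `on_success()` otherwise, and the clock is injectable so the behavior can be tested without sleeping.

```python
import time
from typing import Callable


class AdaptiveThrottle:
    """Token bucket whose refill rate adapts to rate-limit signals (AIMD)."""

    def __init__(self, rate: float, max_rate: float, min_rate: float = 0.1,
                 burst: float = 1.0, increase: float = 0.1,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate          # currently allowed requests per second
        self.max_rate = max_rate  # ceiling, e.g. the provider's documented quota
        self.min_rate = min_rate  # floor, so requests never stop entirely
        self.burst = burst        # bucket capacity
        self.increase = increase  # additive step applied after each success
        self.clock = clock
        self.tokens = burst
        self.last = clock()

    def _refill(self) -> None:
        # Credit tokens for the time elapsed at the current rate.
        now = self.clock()
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self) -> bool:
        """Consume one token if available; never blocks."""
        self._refill()
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False

    def on_success(self) -> None:
        self._refill()
        self.rate = min(self.max_rate, self.rate + self.increase)  # additive increase

    def on_rate_limited(self) -> None:
        self._refill()
        self.rate = max(self.min_rate, self.rate / 2)  # multiplicative decrease
```

When `try_acquire()` returns `False`, the caller can wait briefly or, for low-criticality data, route the request to a fallback source instead of queuing it behind the API.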

Case Study: Energy and Utilities in Spain

Objective: To collect data on tariffs, commercial terms, and regulatory updates without operational disruptions.

Process: Integration of official APIs with adaptive rate limiting and incremental crawling of regulatory portals.

Result: 40% reduction in rate limit errors and significant improvement in the continuity of data flow for strategic analysis.

Case Study: Retail & E-commerce in the United States

Objective: Monitor prices and stock levels across multiple marketplaces in near real-time.

Process: Use of public APIs as the primary source, selective scraping of non-exposed data, and distributed serverless orchestration.

Result: Reduced time-to-insight, early detection of pricing changes, and a sustained competitive advantage in revenue management strategies.

In all cases, security is not treated as a constraint, but as an enabler of scale and trust, especially in enterprise environments with multiple regions and regulatory frameworks.

Modern Architectures: APIs, Microservices, and Serverless

Beyond Monolithic Systems

Data collection at scale can no longer rely on monoliths. Modern architectures combine APIs, scraping, and crawling within distributed ecosystems, with specialized microservices and serverless execution to handle peak demand.

Distributed Crawling Systems

At Scraping Pros, we design distributed, incremental crawling systems capable of updating large volumes of information without overloading infrastructure or violating access policies, laying the foundation for more precise downstream analysis.
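Incremental crawling hinges on skipping pages that have not changed. A minimal sketch, assuming page bodies are fetched elsewhere, fingerprints each page with SHA-256 after collapsing whitespace so that cosmetic reformatting is not treated as a change. Where a site supports HTTP conditional requests (`ETag` with `If-None-Match`, or `Last-Modified` with `If-Modified-Since`), those can avoid the download entirely; the hashing approach below works even when they are absent. The class name is hypothetical.

```python
import hashlib


class IncrementalCrawlState:
    """Remembers a fingerprint per URL so only changed pages are re-processed."""

    def __init__(self) -> None:
        self._fingerprints: dict[str, str] = {}

    @staticmethod
    def fingerprint(body: str) -> str:
        # Collapse whitespace so cosmetic reformatting does not count as a change.
        normalized = " ".join(body.split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def has_changed(self, url: str, body: str) -> bool:
        """True on first sight of a URL or when its normalized content differs."""
        fp = self.fingerprint(body)
        if self._fingerprints.get(url) == fp:
            return False
        self._fingerprints[url] = fp
        return True
```

In a real crawler the fingerprint map would live in a shared store so that distributed workers agree on what has already been processed.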

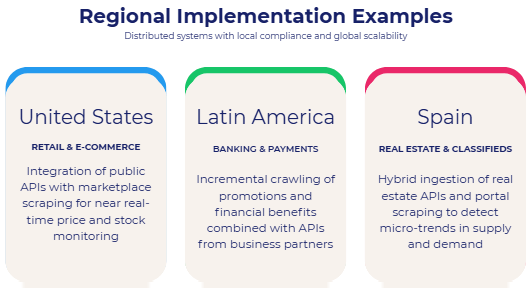

Regional Implementation Examples

Specific examples include:

- United States (Retail & E-commerce): Integration of public APIs with marketplace scraping for near real-time price and stock monitoring

- Latin America (Banking & Payments): Incremental crawling of promotions and financial benefits combined with APIs from business partners

- Spain (Real Estate & Classifieds): Hybrid ingestion of real estate APIs and portal scraping to detect micro-trends in supply and demand

Data Governance and Global Scalability

The Governance Imperative

As data sources multiply, governance becomes critical. Simply collecting data is no longer enough; it’s essential to trace its origin, version it, and audit its use. Organizations that scale without this control accumulate technical debt and regulatory risk.

Global Operations with Local Compliance

Scraping Pros integrates data lineage, version control, and source classification from the initial design stage. This allows operations across multiple regions—Latin America, Europe, and the United States—while complying with local regulations without sacrificing speed or analytical depth.
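Lineage can start as simply as attaching a provenance record to every batch at ingestion time. The field names and allowed source types below are hypothetical, and the payload hash is computed over key-sorted JSON so that the same data always produces the same digest, which makes later audits and deduplication straightforward.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Optional

ALLOWED_SOURCE_TYPES = {"api", "scrape", "crawl"}


@dataclass(frozen=True)
class LineageRecord:
    source_type: str     # how the data was obtained
    source_id: str       # endpoint or URL it came from
    schema_version: str  # version of the API contract or extractor
    region: str          # jurisdiction whose rules apply
    fetched_at: str      # ISO-8601 UTC timestamp
    payload_sha256: str  # digest of the raw payload, for audits


def make_lineage(source_type: str, source_id: str, schema_version: str, region: str,
                 payload: dict[str, Any], now: Optional[datetime] = None) -> LineageRecord:
    if source_type not in ALLOWED_SOURCE_TYPES:
        raise ValueError(f"unknown source type: {source_type}")
    now = now or datetime.now(timezone.utc)
    # Canonical JSON (sorted keys, no extra whitespace) gives a stable digest.
    raw = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return LineageRecord(source_type, source_id, schema_version, region,
                         now.isoformat(), hashlib.sha256(raw).hexdigest())
```

The `region` field is what lets per-jurisdiction retention or access rules be applied downstream without re-deriving where each record came from.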

From Collection to Market Intelligence

Transforming Data into Strategic Assets

True value emerges when the extraction infrastructure becomes a business intelligence platform. APIs and scraping cease to be tactical tools and transform into strategic assets.

Predictive Market Intelligence

In all our projects, the ultimate goal is not simply “to have data,” but to anticipate market movements: detecting pricing changes, signs of expansion, inventory variations, or regulatory changes before the competition.
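Detecting pricing changes early often starts with a plain comparison of consecutive snapshots. The sketch below assumes prices have already been normalized into `{sku: price}` dictionaries and uses a hypothetical 5% threshold; real thresholds would depend on the category and on how noisy the source is.

```python
def detect_price_changes(previous: dict[str, float], current: dict[str, float],
                         threshold: float = 0.05) -> dict[str, float]:
    """Return SKU -> relative change for prices that moved by at least `threshold`."""
    changes: dict[str, float] = {}
    for sku, new_price in current.items():
        old_price = previous.get(sku)
        if old_price is None or old_price == 0:
            continue  # new listing or unusable baseline; handled elsewhere
        delta = (new_price - old_price) / old_price
        if abs(delta) >= threshold:
            changes[sku] = round(delta, 4)
    return changes


alerts = detect_price_changes(
    {"A": 100.0, "B": 50.0, "C": 20.0},
    {"A": 90.0, "B": 51.0, "C": 20.0, "D": 5.0},
)
```

Here only SKU `A` is flagged (a 10% drop); `B` moved 2%, below the threshold, and `D` has no baseline yet. New listings such as `D` are themselves a useful expansion signal and could feed a separate alert.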

Conclusion: Leadership through Integration, Not Volume

The difference between collecting data and leading the market lies in the architecture. Companies that integrate APIs, crawling, and web scraping into a cohesive strategy gain a competitive advantage that’s difficult to replicate.

Scraping Pros doesn’t offer isolated tools. We design data extraction and integration infrastructures built for business intelligence with a focus on security, scalability, and the long term. In an environment where information is abundant but competitive advantage is scarce, leadership means integrating better and faster.

FAQ: API Integration

What is the difference between API data extraction and web scraping?

API extraction uses structured, authorized endpoints, while web scraping collects data directly from web interfaces. Enterprises often combine both for completeness.

When should companies use hybrid API scraping?

When APIs expose partial data, have rate limits, or lack historical depth, hybrid models ensure continuity and market coverage.

Is API-based extraction more secure than traditional web scraping?

APIs reduce certain risks, but security depends on authentication, rate limiting, and governance. Poorly designed API usage can be as risky as scraping.

How does Scraping Pros integrate APIs at enterprise scale?

Through modular architectures that combine APIs, distributed crawling, and scraping with centralized security and monitoring.

Can API rate limits impact data-driven decision making?

Yes. Without adaptive throttling and fallback strategies, rate limits can create blind spots in market intelligence.

Are APIs enough for competitive market analysis?

Rarely. The most valuable competitive signals often exist outside official APIs, requiring complementary scraping strategies.

Want expert implementation of hybrid scraping strategies?

Our enterprise team can design and implement a custom solution that combines APIs and scraping for maximum reliability and efficiency.