Introduction: Scalable Data Collection as Enterprise Infrastructure

Data collection methods have evolved from simple HTML extraction into critical enterprise infrastructure. Today, advanced data collection methods power pricing intelligence, competitive monitoring, and AI-driven decision systems across global organizations.

Web scraping is no longer a tactical tool—it’s a strategic capability. Modern data collection methods combine distributed crawling, API-first strategies, event-driven pipelines, and microservices architecture to deliver scalable, reliable data at enterprise scale.

The key shift is architectural. Rather than asking how to scrape faster, leading organizations now ask: How do we design data collection methods that scale reliably, integrate seamlessly, and evolve alongside our business?

At Scraping Pros, we witness this transformation firsthand across global deployments. The most successful implementations treat web data collection as distributed infrastructure—not as isolated scripts. When data collection methods are designed with modularity, observability, security, and resilience from day one, they become sustainable competitive advantages.

1. Why Traditional Data Collection Methods Fail at Scale

Many scraping initiatives fail not because data extraction is impossible, but because the architecture was never designed to accommodate growth. Monolithic crawlers, tightly coupled pipelines, and rigid scheduling models quickly become bottlenecks as data volume, source diversity, and the business criticality of the data increase.

As organizations expand into new markets or require fresher data, these outdated data collection methods accumulate operational debt in the form of brittle workflows, delayed updates, manual fixes, and opaque failures. What initially worked for a single team becomes unsustainable at the enterprise level.

This is where scalable web scraping diverges sharply from basic automation. Scalability isn’t just about running more scrapers; it’s about designing systems that can absorb change without constant reengineering. According to Gartner’s research on data integration, enterprises that treat data collection as infrastructure see 3x faster time-to-insight than those using ad-hoc methods.

Learn more about web scraping best practices that complement architectural planning.

2. Enterprise Data Collection Methods: Key Requirements

Enterprise data collection differs fundamentally from ad hoc scraping. It must support continuity, governance, and integration across multiple business units and geographies.

In practice, this means data collection methods must be:

- Continuous, not project-based

Data flows 24/7, supporting real-time decision-making rather than one-off reports.

- Integrated, not isolated

Modern data collection methods feed directly into data warehouses, BI tools, and AI models without manual intervention.

- Observable, not opaque

Teams need real-time visibility into performance, data quality, and system health.

- Adaptive, not static

Sources change constantly. Effective data collection methods detect and respond to structural changes automatically.
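To make the "adaptive, not static" requirement concrete, here is a minimal sketch of one possible way to detect structural changes in a source page. It assumes that hashing a page's tag-and-class skeleton is a good enough signal; the names `structure_fingerprint` and `structure_changed` are hypothetical and are not part of any specific platform. A production system would likely use more tolerant comparisons, since this version flags any markup difference.

```python
import hashlib
from html.parser import HTMLParser


class _TagCollector(HTMLParser):
    """Collects start-tag names and CSS classes, ignoring all text content."""

    def __init__(self):
        super().__init__()
        self.tokens = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        classes = dict(attrs).get("class") or ""
        self.tokens.append(f"{tag}.{'.'.join(sorted(classes.split()))}")


def structure_fingerprint(html: str) -> str:
    """Hash of the page's tag/class skeleton; text changes do not affect it."""
    collector = _TagCollector()
    collector.feed(html)
    collector.close()
    skeleton = "|".join(collector.tokens)
    return hashlib.sha256(skeleton.encode("utf-8")).hexdigest()


def structure_changed(previous_html: str, current_html: str) -> bool:
    """True when the markup skeleton differs between two snapshots."""
    return structure_fingerprint(previous_html) != structure_fingerprint(current_html)


if __name__ == "__main__":
    old = '<div class="price"><span>10.00</span></div>'
    new_price = '<div class="price"><span>12.50</span></div>'
    new_layout = '<div class="product-price"><b>12.50</b></div>'
    print(structure_changed(old, new_price))   # only the text changed
    print(structure_changed(old, new_layout))  # the markup itself changed
```

A price update leaves the fingerprint untouched, while a renamed class or replaced element changes it, which is the kind of signal that could trigger a selector review before bad data reaches downstream systems.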

When these conditions are met, scraping becomes a reliable producer of enterprise-grade data rather than a fragile upstream dependency. Our guide to enterprise data integration covers these principles in depth.

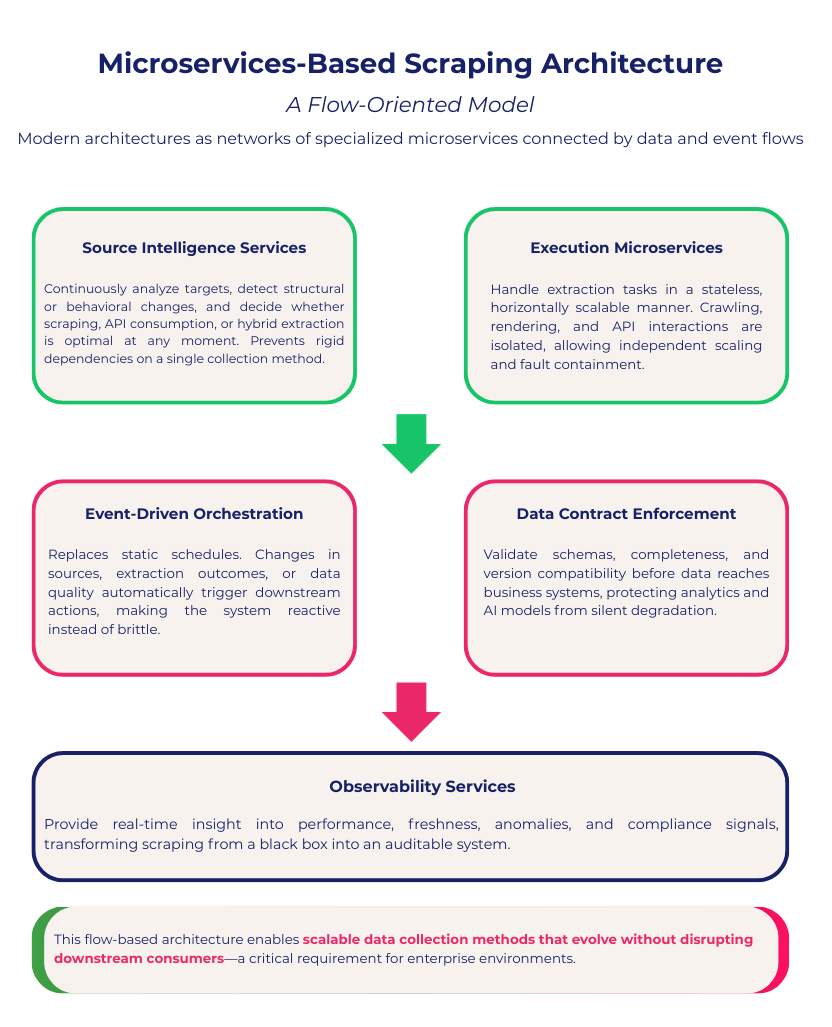

3. Advanced Data Collection Methods: Microservices Architecture

Instead of structuring scraping systems as stacked layers, modern architectures are better understood as networks of specialized microservices connected by data and event flows. This approach mirrors how scalable enterprise systems are built across finance, logistics, and cloud-native platforms, following cloud-native architecture principles.

In this model, each component focuses on a single responsibility and communicates through explicit contracts.

- Source Intelligence Services continuously analyze targets, detect structural or behavioral changes, and decide whether scraping, API consumption, or hybrid extraction is optimal at any moment. This prevents rigid dependencies on a single collection method.

- Execution Microservices handle extraction tasks in a stateless, horizontally scalable manner. Crawling, rendering, and API interactions are isolated, allowing independent scaling and fault containment.

- Event-Driven Orchestration replaces static schedules. Changes in sources, extraction outcomes, or data quality automatically trigger downstream actions, making the system reactive instead of brittle.

- Data Contract Enforcement Services validate schemas, completeness, and version compatibility before data reaches business systems, protecting analytics and AI models from silent degradation.

- Observability Services provide real-time insight into performance, freshness, anomalies, and compliance signals, transforming scraping from a black box into an auditable system.

This flow-based architecture enables scalable data collection methods that evolve without disrupting downstream consumers—a critical requirement for enterprise environments.
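The interplay between event-driven orchestration and data contract enforcement can be sketched in a few lines. This is an illustrative, in-process approximation only: a real deployment would presumably use a message broker and a schema registry, and every name here (`EventBus`, `REQUIRED_FIELDS`, the `record.*` topics, `build_pipeline`) is a hypothetical choice made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Event:
    topic: str
    payload: dict[str, Any]


class EventBus:
    """In-process stand-in for a message broker (assumption for this sketch)."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[Event], None]) -> None:
        self._handlers[topic].append(handler)

    def publish(self, event: Event) -> None:
        for handler in self._handlers[event.topic]:
            handler(event)


# Hypothetical data contract: field name -> expected Python type.
REQUIRED_FIELDS = {"sku": str, "price": float, "currency": str}


def contract_violations(record: dict[str, Any]) -> list[str]:
    """Returns a list of human-readable contract problems (empty if valid)."""
    problems = []
    for name, expected in REQUIRED_FIELDS.items():
        if name not in record:
            problems.append(f"missing field: {name}")
        elif not isinstance(record[name], expected):
            problems.append(f"{name}: expected {expected.__name__}")
    return problems


def build_pipeline(bus: EventBus, warehouse: list, quarantine: list) -> None:
    """Wires extraction events to validation, then to delivery or quarantine."""

    def on_extracted(event: Event) -> None:
        problems = contract_violations(event.payload)
        if problems:
            rejected = {"record": event.payload, "problems": problems}
            bus.publish(Event("record.rejected", rejected))
        else:
            bus.publish(Event("record.validated", event.payload))

    bus.subscribe("record.extracted", on_extracted)
    bus.subscribe("record.validated", lambda e: warehouse.append(e.payload))
    bus.subscribe("record.rejected", lambda e: quarantine.append(e.payload))


if __name__ == "__main__":
    bus, warehouse, quarantine = EventBus(), [], []
    build_pipeline(bus, warehouse, quarantine)
    bus.publish(Event("record.extracted", {"sku": "A1", "price": 9.99, "currency": "USD"}))
    bus.publish(Event("record.extracted", {"sku": "B2", "price": "n/a"}))
    print(len(warehouse), len(quarantine))
```

Because the extraction step only publishes events and never calls the warehouse directly, the validator, the delivery step, and the quarantine handler can each be replaced or scaled without touching the others.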

For technical implementation details, see our API-first scraping guide.

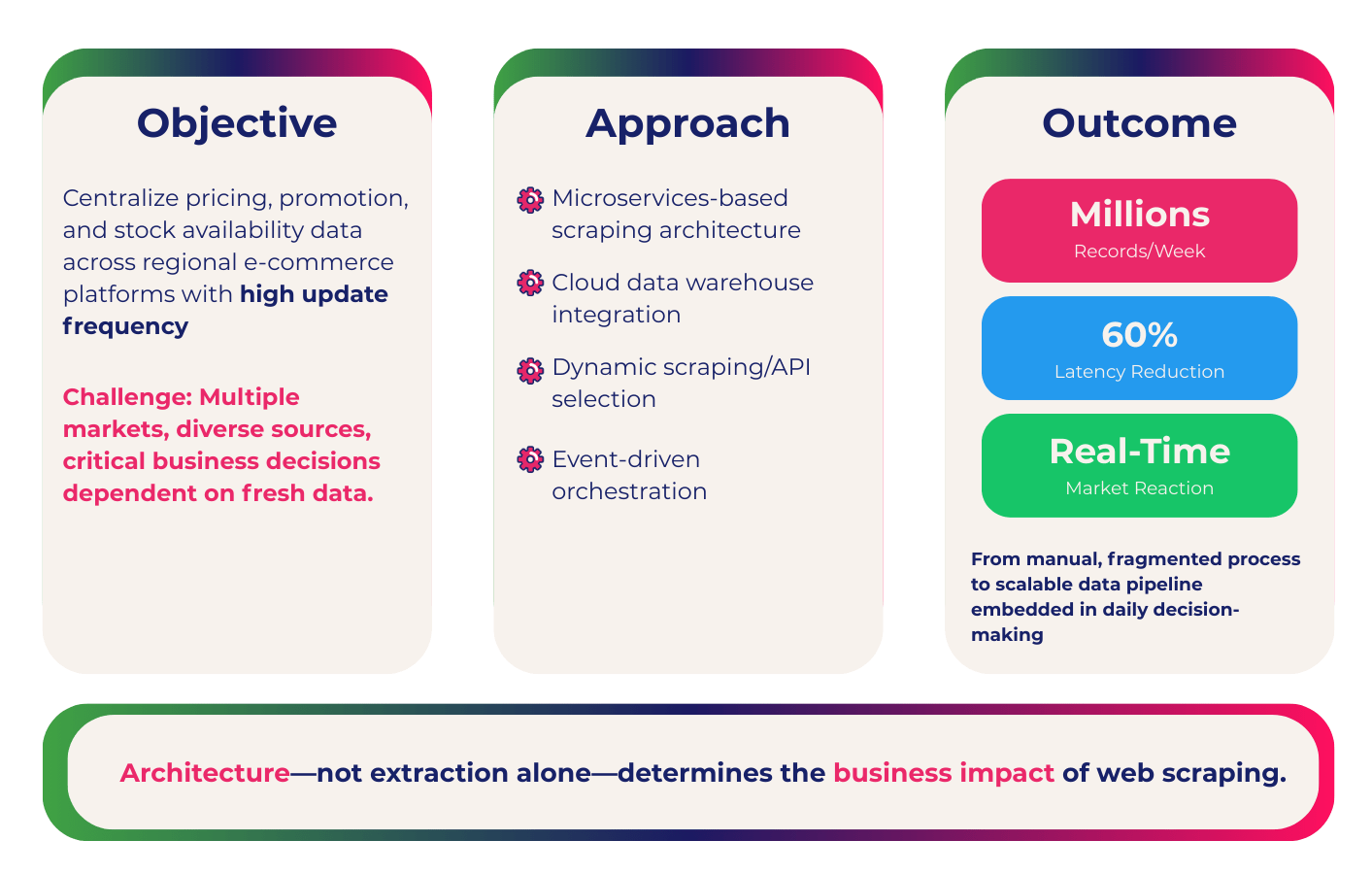

4. LATAM Success Case: Scalable Market Intelligence for Regional Retail

A large retail group operating across multiple Latin American markets partnered with Scraping Pros to modernize its competitive intelligence capabilities.

- Objective:

Centralize pricing, promotion, and stock availability data across regional e-commerce platforms with high update frequency.

- Approach:

Scraping Pros designed a microservices-based scraping architecture integrated directly with the company’s cloud data warehouse. Source intelligence services dynamically selected between scraping and API-based extraction, while event-driven orchestration ensured rapid updates without overloading target sites.

- Outcome:

The system now processes millions of product records weekly, has reduced update latency by more than 60%, and enables pricing teams to react to market changes in near real time. What was once a manual, fragmented process became a scalable data pipeline embedded in daily decision-making.

This case illustrates how architecture—not extraction alone—determines the business impact of web scraping.

5. How to Evaluate Enterprise Data Collection Methods

Selecting data collection methods for enterprise use requires evaluating architectural maturity, not just feature lists.

- Decoupling and modularity:

Can components evolve independently without breaking the system?

- Scalability by design:

Does the architecture support granular scaling, or does growth require full redesigns?

- Event-driven resilience:

How does the system respond to failures, changes, or spikes in demand?

- Data quality enforcement:

Are schemas, validations, and versioning treated as first-class concerns?

- Observability and governance:

Can technical and business stakeholders understand what the system is doing at any moment?

A scalable web scraping solution is ultimately judged by how predictably it delivers trustworthy data under changing conditions. Following AWS Well-Architected Framework principles, effective data collection methods prioritize reliability, performance, and operational excellence.

Review our scraping architecture checklist to evaluate your current capabilities.

6. Future of Data Collection Methods: 2026-2027 Market Outlook

According to industry data, the web scraping tools and data extraction ecosystem is expanding significantly as organizations increase their use of external data for analytics, competitive intelligence, pricing strategies, and AI workflows.

Industry analysis from Grand View Research indicates that the global web scraping software market is expected to grow steadily, reaching approximately USD 168.31 million in 2026 and USD 173.95 million in 2027. This growth reflects the sustained adoption of cloud-based scraping, automation, and integration capabilities across sectors.

This steady upward trajectory underscores how enterprises increasingly view scraping not as a niche utility but as critical infrastructure that provides real-time insights, supports predictive models, and improves decision-making systems.

Additionally, broader studies estimate that the total web scraping software market could grow at a double-digit rate over the long term (e.g., reaching multi-billion-dollar valuations by the early 2030s), which reinforces the strategic importance of scalable, resilient scraping architectures.

Enterprises that invest in modern data collection methods, such as distributed microservices, event-driven orchestration, and observability-first design, are positioning themselves not only to keep pace with this growth, but also to leverage external data as a sustainable competitive advantage.

As highlighted in Harvard Business Review’s data strategy framework, competitive advantage increasingly depends on the ability to operationalize external data at scale.

Conclusion: Building Durable Advantage Through Architecture

The evolution of web scraping reflects a broader truth about modern data systems: tools don’t scale; architectures do. Enterprises that invest in scalable web scraping architectures gain more than just operational efficiency; they also gain strategic flexibility.

Modern data collection methods require architectural thinking. When implemented correctly, these methods transform web data from a tactical resource into a strategic asset that powers pricing decisions, market intelligence, and AI-driven insights.

At Scraping Pros, we design data collection systems that seamlessly integrate into enterprise environments, reliably operate at scale, and evolve alongside businesses. By aligning advanced data collection methods with modern microservices principles, we help organizations around the world transform web data into a sustainable competitive advantage.

Contact our team to discuss custom scraping solutions for your enterprise.

Frequently Asked Questions (FAQs)

What are advanced data collection methods in web scraping?

Advanced data collection methods go beyond basic HTML extraction. They combine distributed crawling, API-first strategies, event-driven pipelines, and scalable architectures to reliably collect, process, and integrate web data into enterprise systems.

What is scalable web scraping and why does it matter for enterprises?

Scalable web scraping refers to the ability to increase data volume, frequency, and source complexity without redesigning the system or exponentially increasing costs. For enterprises, scalability is critical to support growth, real-time analytics, and consistent data quality across regions and markets.

How does a microservices web scraping architecture work?

A microservices web scraping architecture breaks the scraping system into independent services—such as extraction, scheduling, transformation, and delivery—that communicate via events or APIs. This approach improves resilience, enables parallel scaling, and allows teams to update components without disrupting the entire platform.

What is the difference between a scraping tool and an enterprise scraping platform?

A scraping tool focuses on extracting data from websites. An enterprise scraping platform integrates extraction into the broader data ecosystem, including orchestration, governance, observability, security, and direct integration with data lakes, warehouses, and analytics systems.

How do distributed scraping systems improve reliability?

Distributed scraping systems spread workloads across multiple nodes and regions, reducing single points of failure. They allow incremental updates, intelligent retries, and adaptive behavior when target sites change, ensuring stable data pipelines even at large scale.
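As a rough illustration of what "intelligent retries" might look like, the sketch below retries a failing task with exponential backoff and random jitter. The function name `retry_with_backoff` and its parameters are hypothetical, and the jitter strategy (a random wait between zero and the current cap) is one common choice among several rather than a description of any particular platform.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    task: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Runs task, retrying on any exception with exponential backoff.

    The wait cap doubles after each failure (base_delay, 2x, 4x, ...) up to
    max_delay, and the actual wait is drawn uniformly from [0, cap] so that
    many workers do not retry against the same site at the same instant.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
    raise ValueError("max_attempts must be at least 1")


if __name__ == "__main__":
    attempts = {"count": 0}

    def flaky_fetch() -> str:
        # Simulated request that fails twice before succeeding.
        attempts["count"] += 1
        if attempts["count"] < 3:
            raise ConnectionError("temporary failure")
        return "ok"

    print(retry_with_backoff(flaky_fetch, base_delay=0.01))
```

The `sleep` parameter is injectable so the waiting behavior can be tested or replaced by a scheduler; in a distributed setup, a failed task would more likely be re-queued with a delay than block a worker.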

Can web scraping be integrated with existing enterprise data pipelines?

Yes. Modern enterprise data collection platforms are designed to integrate natively with existing pipelines using APIs, message queues, and cloud-native services. This enables scraped data to flow directly into BI tools, pricing engines, and AI models without manual intervention.

What role does observability play in enterprise web scraping?

Observability provides visibility into scraper performance, data quality, failures, and system health. Metrics, logs, and alerts allow teams to detect issues early, ensure SLA compliance, and maintain trust in data used for critical business decisions.
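A small sketch can show how such metrics might turn into alerts. It assumes each scraper run is logged as a record with a source name, a finish time, and a success flag; the `RunRecord` type, the `health_report` function, and the thresholds (a 90% success rate and a six-hour freshness window) are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class RunRecord:
    source: str
    finished_at: datetime
    succeeded: bool


def health_report(
    runs: list[RunRecord],
    now: datetime,
    max_age: timedelta = timedelta(hours=6),
    min_success_rate: float = 0.9,
) -> dict[str, dict]:
    """Computes per-source success rate and freshness, plus any alerts."""
    by_source: dict[str, list[RunRecord]] = {}
    for run in runs:
        by_source.setdefault(run.source, []).append(run)

    report = {}
    for source, source_runs in by_source.items():
        successes = [r for r in source_runs if r.succeeded]
        success_rate = len(successes) / len(source_runs)
        last_success = max((r.finished_at for r in successes), default=None)

        alerts = []
        if success_rate < min_success_rate:
            alerts.append("low success rate")
        if last_success is None or now - last_success > max_age:
            alerts.append("stale data")

        report[source] = {
            "success_rate": success_rate,
            "last_success": last_success,
            "alerts": alerts,
        }
    return report


if __name__ == "__main__":
    now = datetime(2026, 1, 1, 12, tzinfo=timezone.utc)
    runs = [
        RunRecord("shop-a", now - timedelta(hours=1), True),
        RunRecord("shop-a", now - timedelta(hours=2), True),
        RunRecord("shop-b", now - timedelta(hours=1), False),
        RunRecord("shop-b", now - timedelta(hours=8), True),
    ]
    for source, status in health_report(runs, now).items():
        print(source, status["success_rate"], status["alerts"])
```

In practice these figures would usually be exported to a metrics system and evaluated there, but the logic is the same: freshness and success rate are tracked per source so that a single failing site does not hide behind a healthy average.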

Is web scraping compliant with enterprise security and governance standards?

When designed correctly, enterprise web scraping can fully align with security and governance requirements. This includes access controls, audit logs, data lineage, and compliance with regional regulations, making scraping a governed data source rather than an unmanaged risk.

How should companies evaluate scalable data collection methods for enterprise use?

Evaluation should focus on architectural flexibility, integration capabilities, scalability under real workloads, resilience to change, and governance features. The key question is not how much data can be scraped, but how effectively that data can be operationalized.

Why is scalable web scraping becoming critical infrastructure toward 2026–2027?

As market data, pricing intelligence, and real-time signals become central to competitive strategy, enterprises increasingly rely on external web data. Industry projections show sustained market growth, reinforcing that scalable scraping architectures are becoming a core component of modern data infrastructure.