The 2025 Data Scraping Revolution: AI Intelligence Meets Enterprise Scale

Over the past few years, data scraping has evolved from a technical support tool into a strategic source of business intelligence. By 2025, leading organizations in the global market—from Latin American startups to European corporations—will no longer simply collect data: they will interpret, predict, and transform it into automated decisions.

This shift is driven by a decisive combination: artificial intelligence (AI), machine learning (ML), and advanced scraping automation. The result is a new discipline that Scraping Pros calls Intelligent Data Mining, where every line of code learns from its environment, optimizes its behavior, and scales seamlessly to petabyte-sized volumes.

While structured data (SQL databases, traditional APIs) stabilizes, unstructured data—dynamic HTML, social media content, IoT, metaverses, and marketplaces—multiplies exponentially. In this scenario, intelligent data extraction becomes the true engine of competitive advantage.

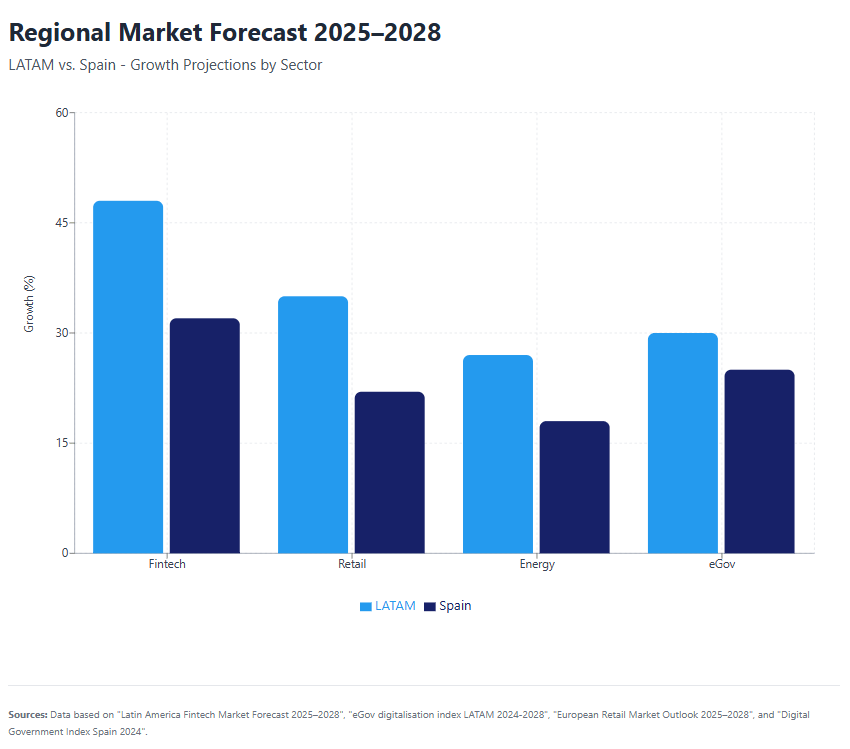

Scraping Pros has a strong operational presence in Latin America and Spain and has been part of this transformation, helping companies in various sectors redesign their relationship with data and accelerate their analytical maturity.

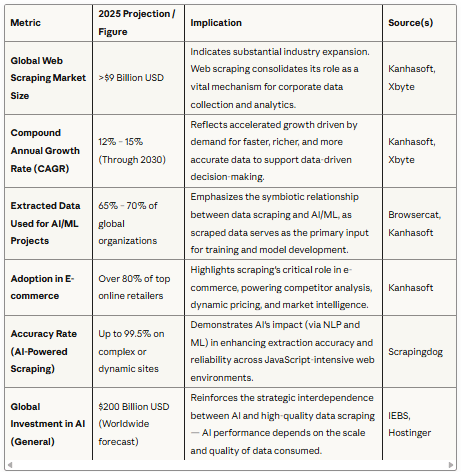

The global web scraping market is projected to reach $1.7 billion by 2027, growing at 13.8% CAGR, driven primarily by AI-powered web scraping innovations and enterprise demand for real-time competitive intelligence.

Emerging Data Scraping Trends Reshaping 2025

2025 is shaping up to be the year when data scraping will cease to be perceived as an auxiliary technique and will be considered a distributed cognitive system. The key web scraping trends point to three revolutionary areas:

Cognitive Automation: The Brain Behind Modern Data Scraping

New scraping automation frameworks not only identify tags or DOM structures: they learn contextually. This means that a scraper can recognize that “price,” “offer,” or “rate” represent the same economic entity, regardless of language or format.
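The idea of mapping different labels to one economic entity can be sketched in a few lines. This is a minimal illustration, assuming a hand-written synonym table in place of a learned model. The `CANONICAL_LABELS` mapping and the `normalize_label` helper are hypothetical names, and a real cognitive scraper would infer these equivalences from context rather than look them up.

```python
import unicodedata
from typing import Optional

# Hypothetical synonym table (assumption): stands in for a learned model.
CANONICAL_LABELS = {
    "price": "price",
    "offer": "price",
    "rate": "price",
    "precio": "price",   # Spanish
    "oferta": "price",   # Spanish
    "tarifa": "price",   # Spanish
    "preco": "price",    # Portuguese "preço" after accent stripping
}

def normalize_label(raw_label: str) -> Optional[str]:
    """Map a scraped field label to a canonical entity, or None if unknown."""
    # Decompose accented characters, then drop the combining marks.
    decomposed = unicodedata.normalize("NFKD", raw_label)
    without_accents = "".join(
        ch for ch in decomposed if not unicodedata.combining(ch)
    )
    # Ignore case, surrounding whitespace, and trailing colons.
    key = without_accents.strip(" :\t\n").lower()
    return CANONICAL_LABELS.get(key)
```

Labels such as `"Precio:"`, `" RATE "`, and `"Preço"` all resolve to `"price"`, while an unrelated label such as `"color"` returns `None` and can be routed to a fallback.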

Fintech Argentina Case Study: A regional fintech company implemented a cognitive scraping system to monitor active loan rates and online credit offers. The model not only extracted the data but also detected semantic changes and alerted risk teams when a competitor modified its commercial policy.

Edge Scraping and Decentralized Processing

As data scraping grows toward petabyte scale, processing everything in the cloud becomes costly and inefficient. By 2025, industry leaders will be moving some processing to the edge (edge computing). This allows intelligent agents to run on nodes closer to the source, reducing latency and bandwidth consumption.

Retail Mexico Case Study: An appliance retail chain deployed micro-agents across different regions of the country to collect real-time competitor pricing. 70% of data normalization was performed directly at the edge, achieving a 42% reduction in transfer costs.
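To make the edge normalization step concrete, here is a small sketch of what a micro-agent might do before sending data upstream. It assumes prices use a comma as the thousands separator and a dot for decimals. The record fields and the `normalize_at_edge` function are illustrative assumptions, not the retailer's actual implementation.

```python
import json
import re

# Assumption: comma as thousands separator, dot as decimal separator.
PRICE_PATTERN = re.compile(r"\d[\d,]*(?:\.\d+)?")

def normalize_at_edge(raw: dict, currency: str = "MXN") -> dict:
    """Reduce a raw scraped record to the compact fields worth transferring."""
    match = PRICE_PATTERN.search(raw["price_text"])
    if match is None:
        raise ValueError(f"no price found in {raw['price_text']!r}")
    amount = float(match.group(0).replace(",", ""))
    return {
        "sku": raw["sku"].strip().upper(),
        "price": amount,
        "currency": currency,
    }

# Hypothetical raw record: the bulky markup never leaves the edge node.
raw_record = {
    "sku": " ab-123 ",
    "price_text": "Precio: $1,299.00 MXN",
    "html": "<div class='product'>" + "x" * 2000 + "</div>",
}
compact = normalize_at_edge(raw_record)
saved_bytes = len(json.dumps(raw_record)) - len(json.dumps(compact))
```

Only the compact record is transferred, which is where savings in bandwidth come from. The actual reduction depends on how much raw markup each page carries.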

AI Orchestration and Autonomous Pipelines

The most advanced companies are integrating AI-based orchestrators into their scraping tools. These systems dynamically allocate resources, select the appropriate extraction model based on the site type, and apply self-healing algorithms when they detect blocks or CAPTCHAs.
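One simple form of self-healing is a fallback chain: when one fetch strategy is blocked, the orchestrator tries the next. The sketch below assumes each strategy is a plain function that raises a hypothetical `BlockedError` on a block or CAPTCHA. Real orchestrators would add scheduling, backoff, and model selection on top.

```python
from typing import Callable, List, Tuple

class BlockedError(Exception):
    """Raised by a fetch strategy when the site blocks it or serves a CAPTCHA."""

# A strategy is a (name, fetch function) pair; both are illustrative.
Strategy = Tuple[str, Callable[[str], str]]

def fetch_with_fallback(url: str, strategies: List[Strategy]) -> Tuple[str, str]:
    """Try each strategy in order and return (strategy name, page content)."""
    failures = []
    for name, fetch in strategies:
        try:
            return name, fetch(url)
        except BlockedError as exc:
            # Record the failure and fall through to the next strategy.
            failures.append(f"{name}: {exc}")
    raise BlockedError("all strategies failed: " + "; ".join(failures))
```

Ordering the strategies from cheapest to most expensive (for example, a plain HTTP client before a headless browser) keeps the common case fast and reserves costly resources for sites that need them.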

Startup Spain Case Study: A market intelligence startup implemented an autonomous pipeline for online pharmaceutical product tracking. Each scraper evaluated its own performance and retrained its local model if accuracy fell below 95%. In three months, they managed to scale from 1 million to 18 million pages per week without interruption.
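The self-evaluation loop in this case study can be approximated with a rolling accuracy check. The sketch below is a minimal version under stated assumptions: the `AccuracyMonitor` class, the window size, and the way correctness is judged (for example, against a validated sample) are all hypothetical. Only the 95% threshold comes from the case above.

```python
from collections import deque

class AccuracyMonitor:
    """Track extraction accuracy over a sliding window of validated samples."""

    def __init__(self, threshold: float = 0.95, window: int = 200):
        self.threshold = threshold
        self.results = deque(maxlen=window)  # oldest results drop off automatically

    def record(self, correct: bool) -> None:
        self.results.append(correct)

    @property
    def accuracy(self) -> float:
        if not self.results:
            return 1.0  # no evidence of problems yet
        return sum(self.results) / len(self.results)

    def needs_retraining(self) -> bool:
        # Wait for a full window so a few early errors do not trigger retraining.
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.accuracy < self.threshold
```

A scraper would call `record()` after each validated extraction and trigger its retraining job whenever `needs_retraining()` returns `True`.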

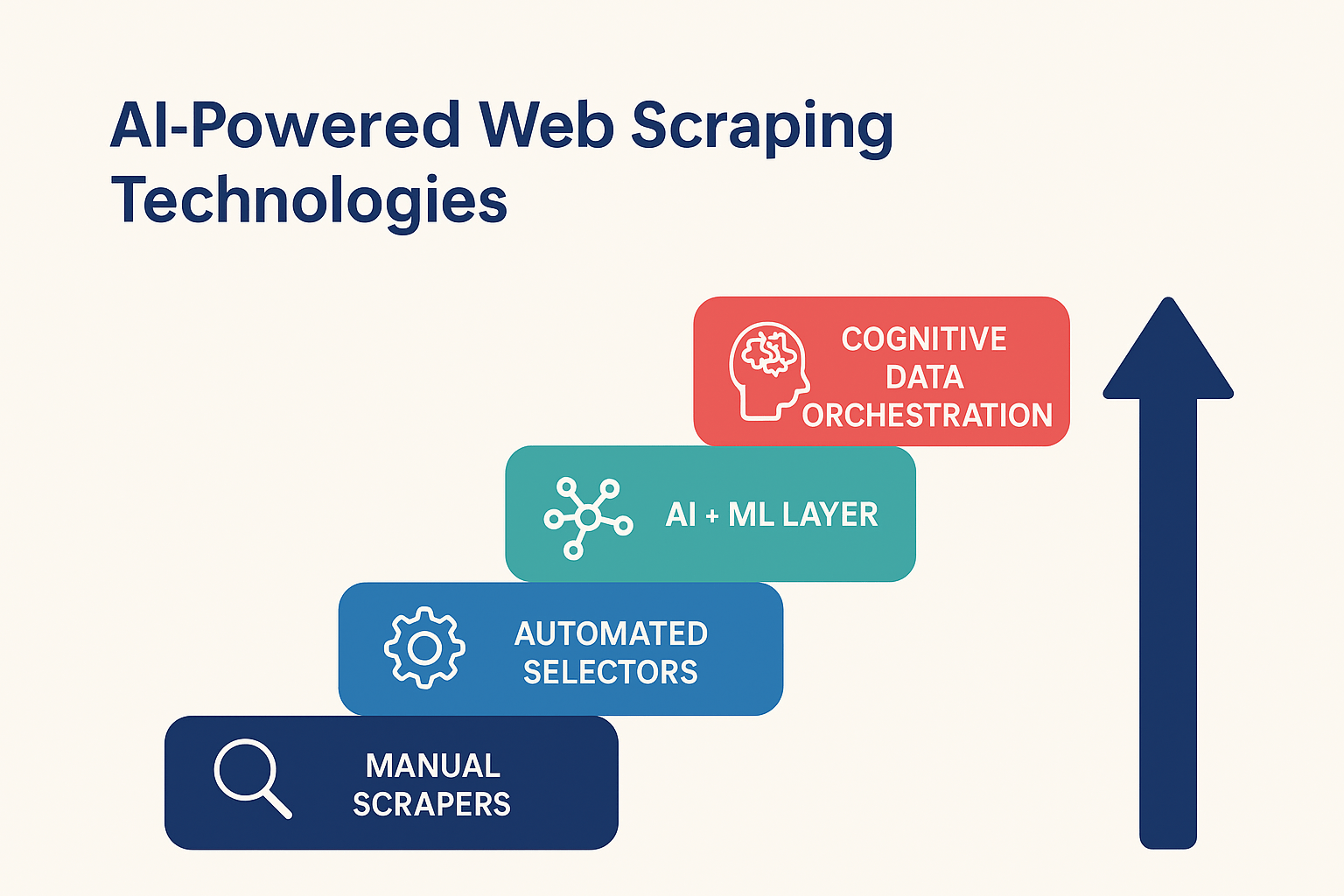

AI-Powered Web Scraping Technologies Driving Innovation

The most notable leap in 2025 comes from the fusion of machine learning and traditional data scraping. This paradigm allows companies to move from simple extract-transform-load (ETL) to extract-learn-act, where each extraction cycle feeds a continuous learning process.

Predictive and Adaptive Models

One of the classic challenges of web scraping has been script breakage when a site’s structure changes. With AI data extraction, this ceases to be an operational problem and becomes a learning opportunity.

Modern systems apply transformers trained on HTML and visual context to anticipate layout changes, identify equivalent elements, and maintain scraping continuity without manual intervention.

Tourism Platform Success Story: A Buenos Aires-based platform integrated a predictive model to analyze historical patterns on booking sites. The model anticipated price tag changes up to 72 hours in advance, reducing maintenance time by 80%.

Semantic Extraction and Contextual Classification

Thanks to language models, AI scraping tools now understand the meaning of data. They can distinguish between “base price,” “promotion,” or “taxable rate” without explicit rules.

This enables scenarios where intelligent data mining acts as an automated analyst, classifying and validating information before ingestion.

Spain Media Analysis: A media consultancy applied contextual extraction to analyze press headlines and detect political polarization biases. The tool identified linguistic patterns and emotional tones, transforming what was previously raw text into actionable data for communications teams.

Architectures Scalable to Petabyte Level

Scraping Pros has worked with architectures that manage billions of records daily. At a technical level, this involves designing distributed pipelines, dynamically balancing nodes, and implementing advanced data compression strategies.

The challenge is not only technical: operating at petabyte scale requires operational discipline and cost optimization. Cost and efficiency models are calibrated on cost per request, throughput, and latency, with the aim of keeping ROI above 30% annually.

Enterprise Scraping Automation Future: The Business Intelligence Core

Corporations have understood that data scraping is not an isolated function of the technical area, but a core component of the business intelligence system. The enterprise scraping future points toward complete integration with decision-making ecosystems.

Integration with BI Ecosystems and MLOps

The most effective scraping automation solutions connect pipelines directly with analytics environments such as Power BI, Snowflake, or Databricks.

This turns scraped web data into an automated input for the decision layer, where dashboards display real-time predictive signals rather than only historical data.

Telecommunications Argentina: Data extracted on competitor plan offers and prices was automatically integrated into the dynamic pricing model. In six weeks, the system had adjusted rates and campaigns based on detected elasticity, achieving a 17% increase in new customer acquisition.

Automating Competitive Intelligence

In Spain, a company in the agri-food sector deployed a competitive scraping engine that tracks more than 400 B2B portals in Europe. AI data extraction classifies supply and demand patterns, estimates price elasticities, and alerts sales teams when it detects opportunities.

The result: Market analysis cycle reduced from 10 days to 2 hours, with an estimated ROI of 38% in the first year.

Data Governance and Compliance

Automation does not absolve companies of responsibility. That’s why leaders in enterprise scraping implement proactive compliance mechanisms, audit logs, and request anonymization.

Scraping Pros integrates policy compliance modules that guarantee traceability and compliance with GDPR in Europe and Habeas Data in Latin America, reinforcing trust and operational security.
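As an illustration of audit logging with masked identifiers, the sketch below writes one JSON audit line per request and replaces the client identifier with a keyed hash. It is a generic example using Python's standard library, not Scraping Pros' compliance module. The field names and the `audit_entry` helper are assumptions. Keyed hashing is pseudonymization rather than full anonymization, so it is only one piece of a compliance strategy.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (hex string)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def audit_entry(url: str, client_id: str, secret_key: bytes) -> str:
    """Build one JSON audit line; the raw client identifier is never stored."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "url": url,
        "client": pseudonymize(client_id, secret_key),
    }
    return json.dumps(entry, sort_keys=True)
```

Because the digest is deterministic for a given key, auditors can still group requests by client without seeing the original identifier.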

Petabyte-Scale Challenge: When Data Never Sleeps

Working at petabyte scale means navigating an ecosystem where volume exceeds human monitoring capacity. A modern enterprise data scraping project can process more than 50 million pages daily, equivalent to 2 PB of processed data per month.
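A quick back-of-the-envelope check shows what these figures imply per page. The calculation assumes a 30-day month and decimal petabytes (10^15 bytes). Both are assumptions made here for the arithmetic, not figures from a specific project.

```python
PAGES_PER_DAY = 50_000_000
MONTHLY_VOLUME_PB = 2
DAYS_PER_MONTH = 30      # assumption
BYTES_PER_PB = 10**15    # assumption: decimal petabytes

pages_per_month = PAGES_PER_DAY * DAYS_PER_MONTH
avg_bytes_per_page = MONTHLY_VOLUME_PB * BYTES_PER_PB / pages_per_month
pages_per_second = PAGES_PER_DAY / 86_400  # seconds in a day

print(f"{pages_per_month:,} pages per month")
print(f"{avg_bytes_per_page / 1e6:.2f} MB processed per page on average")
print(f"{pages_per_second:.0f} pages per second sustained")
```

Under these assumptions, the workload comes to 1.5 billion pages per month, about 1.33 MB of processed data per page, and roughly 579 pages per second around the clock.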

Distributed Infrastructure and Orchestration

To maintain efficiency at this scale, Scraping Pros uses microservices architectures with intelligent load balancers and redundant nodes. Each cluster acts as an autonomous unit, managing queues, errors, and priorities, with real-time dashboard monitoring.
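How a single cluster might manage queues, errors, and priorities can be sketched with a priority queue and a retry limit. This is a simplified, in-memory illustration. The `CrawlQueue` class, its retry policy, and the dead-letter list are hypothetical, and a production system would typically rely on a distributed queue.

```python
import heapq
import itertools

class CrawlQueue:
    """In-memory priority queue with retries; a lower number means higher priority."""

    def __init__(self, max_retries: int = 3):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps insertion order
        self.max_retries = max_retries
        self.dead_letter = []              # URLs that exhausted their retries

    def push(self, url: str, priority: int, attempts: int = 0) -> None:
        heapq.heappush(self._heap, (priority, next(self._counter), url, attempts))

    def pop(self):
        priority, _, url, attempts = heapq.heappop(self._heap)
        return url, priority, attempts

    def report_failure(self, url: str, priority: int, attempts: int) -> None:
        if attempts + 1 >= self.max_retries:
            self.dead_letter.append(url)
        else:
            # Retry later with a slightly lower priority.
            self.push(url, priority + 1, attempts + 1)

    def __len__(self) -> int:
        return len(self._heap)
```

Failed URLs are demoted one priority level per attempt, so a persistently failing page cannot starve healthy ones, and the dead-letter list gives operators a single place to inspect what the cluster gave up on.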

Logistics Chile: A company applied this approach to combine IoT fleet signals with scraped weather and traffic data. Logistics prediction models optimized routes and reduced delivery times by 27%.

Compression, Archiving, and Continuous Learning

Modern intelligent data mining doesn’t delete the data it has already processed; it recycles it. Through semantic compression, the models identify repetitive patterns and store only the significant differences.

This allows for maintaining historical databases equivalent to 50 PB in just 4 PB of effective storage, reducing costs and improving longitudinal learning capacity.
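Keeping 50 PB of history in 4 PB of storage corresponds to a ratio of 12.5:1. The simplest way to store only the differences is field-level delta encoding between snapshots, sketched below. This is a plain stand-in for the semantic compression described above, not the same technique, and the snapshot fields and helper names are hypothetical.

```python
_MISSING = object()  # sentinel so a stored None is not confused with an absent key

def make_delta(previous: dict, current: dict) -> dict:
    """Describe how `current` differs from `previous`, field by field."""
    changed = {
        key: value
        for key, value in current.items()
        if previous.get(key, _MISSING) != value
    }
    removed = [key for key in previous if key not in current]
    return {"set": changed, "unset": removed}

def apply_delta(previous: dict, delta: dict) -> dict:
    """Rebuild the newer snapshot from the older one plus the stored delta."""
    restored = {k: v for k, v in previous.items() if k not in delta["unset"]}
    restored.update(delta["set"])
    return restored

# Hypothetical snapshots of the same product on two consecutive days.
monday = {"sku": "AB-123", "price": 1299.0, "stock": "in", "promo": "10% off"}
tuesday = {"sku": "AB-123", "price": 1249.0, "stock": "in"}
delta = make_delta(monday, tuesday)
```

Here only the changed price and the removed promotion are stored for Tuesday, and `apply_delta(monday, delta)` reproduces the full Tuesday snapshot on demand.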

Performance and Sustainability Monitoring

Petabyte scraping is not only a technical challenge but also an energy one. The most responsible organizations prioritize computational efficiency, minimize duplication, and employ asynchronous processing.
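Two of these practices, removing duplicate work and processing asynchronously, fit in a short sketch built on Python's `asyncio`. The `crawl_unique` function and its `fetch` parameter are illustrative assumptions. Any coroutine that takes a URL and returns a result could be plugged in.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Iterable

async def crawl_unique(
    urls: Iterable[str],
    fetch: Callable[[str], Awaitable[object]],
    max_concurrency: int = 5,
) -> Dict[str, object]:
    """Fetch each distinct URL once, with a cap on concurrent requests."""
    semaphore = asyncio.Semaphore(max_concurrency)
    unique_urls = list(dict.fromkeys(urls))  # drop duplicates, keep order

    async def bounded_fetch(url: str):
        async with semaphore:  # limits how many fetches run at the same time
            return url, await fetch(url)

    pairs = await asyncio.gather(*(bounded_fetch(u) for u in unique_urls))
    return dict(pairs)
```

Deduplicating before scheduling avoids paying twice for the same page, and the semaphore keeps concurrency bounded so the scraper does not overload its own nodes or the target site.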

Scraping Pros develops its own Data Efficiency Index metrics, which measure the energy impact per request and the carbon footprint per dataset.

The Human-AI Synergy: The Human Factor in Automation

Despite the advancement of cognitive scraping, humans remain essential. AI scraping tools require contextual validation, ethical oversight, and strategic calibration.

Scraping Pros operates with specialized human teams that review extraction samples, correct biases, and adjust learning parameters. This synergy ensures not only technical accuracy but also business relevance: data should be used to inform decisions, not just to fill a data lake.

Fintech Peru Example: A company used a hybrid pipeline where human analysts verified anomalies detected by the model. This allowed them to identify regulatory inconsistencies in 0.03% of the data and avoid penalties for inaccurate information.

The Path Forward: Autonomous and Intelligent Enterprise Scraping

Data scraping is entering a new phase: operational autonomy and continuous learning.

Key developments shaping the enterprise scraping future:

- Pipelines can now detect failures, reconfigure themselves, and scale without intervention

- Proliferation of self-orchestrated systems combining contextual intelligence, semantic insight, and temporal prediction

- The goal transcends simple extraction: building an intelligent extraction ecosystem that evolves with the digital environment

For businesses across any industry, this means a competitive leap: accessing clean, contextual, and predictive data in real time without relying on external integrations.

Scraping Pros: Engineering the Future of Data Intelligence

2025 marks the beginning of a new era: that of intelligent scraping at enterprise scale. Data has become the most valuable asset, but only those who can capture, structure, and act on it accurately will dominate their market.

At Scraping Pros, we understand that the future is not just about extracting information, but about understanding it before everyone else.

Our teams combine AI data extraction, distributed scraping automation, and human expertise to transform digital chaos into measurable business decisions.

“In the next decade, companies won’t compete to have more data, but to have the right data at the right time.” (Research Team, Scraping Pros)

Ready to Transform Your Data Strategy?

Schedule a free scraping audit and discover how to scale your intelligent extraction strategy by 2025.

FAQs – Data Scraping Trends 2025

What is intelligent data scraping?

Intelligent data scraping, which Scraping Pros calls Intelligent Data Mining, is the evolution of traditional web scraping toward AI-based models that learn from context, correct errors, and adapt their behavior without manual intervention. It combines machine learning, natural language processing, and autonomous decision-making.

How are companies applying AI-powered scraping automation?

In Latin America, sectors like fintech, retail, and logistics use cognitive scraping for price monitoring, risk detection, and market analysis. In Spain, it’s applied to competitive intelligence and media analysis. AI scraping tools enable real-time data extraction with predictive capabilities.

What are the advantages of scaling scraping to the petabyte level?

Petabyte-scale data scraping allows integration of multiple global sources in real time, generation of predictive insights, and maintenance of complete historical data for training ML models without loss of granularity. It enables enterprise-wide intelligence previously impossible.

What differentiates traditional scraping from AI-driven scraping?

Traditional web scraping relies on fixed rules and breaks when sites change. AI data extraction is adaptable, semantic, and self-regulating, which reduces maintenance by up to 80% and increases resilience to structural changes through continuous learning.

How does Scraping Pros guarantee quality and regulatory compliance?

Through automated audits, request anonymization, GDPR and Habeas Data compliance modules, and strategic human review to ensure data accuracy and ethical practices. Our approach to enterprise scraping prioritizes governance alongside performance.

What industries benefit most from web scraping trends in 2025?

E-commerce, financial services, real estate, travel, healthcare, logistics, and market research lead adoption. Any data-driven organization competing on price intelligence, market timing, or competitive analysis benefits from modern scraping automation.

How much does enterprise-level AI scraping cost?

Investment varies based on scale, complexity, and data volume. Most enterprises see positive ROI within 6-12 months. Scraping Pros offers customized pricing with performance guarantees and scalable architectures that grow with your needs.